Common Security Risks in Polkadot SDK Development

This presentation discusses common security risks in Polkadot SDK (Polkadot, Substrate, Cumulus, etc.) development and methods to mitigate them.

Each security risk is presented in five parts: Challenge, Risk, Case Studies, Mitigation, and Takeaways.

Security Risks

- Insecure Randomness

- Storage Exhaustion

- Insufficient Benchmarking

- Outdated Crates

- XCM Misconfiguration

- Unsafe Math

- Replay Issues

- Unbounded Decoding

- Verbosity Issues

- Inconsistent Error Handling

Disclaimer

Apart from the mitigations suggested here, it is always important to have a proper audit and thorough testing.

This is especially true if your system handles real-value assets, since an exploit could cause losses for their owners.

Insecure Randomness

---v

Challenge

On-chain randomness in any public, decentralized, and deterministic system like a blockchain is difficult!

- Use of weak cryptographic algorithms or insecure randomness in the system can compromise the integrity of critical functionalities.

- This could allow attackers to predict or manipulate the outcome of any feature that relies on secure randomness.

---v

Risk

- Manipulation or prediction of critical functionalities, leading to compromised integrity and security.

- Potential for attackers to gain an unfair advantage, undermining trust in the system.

---v

Case Study - Randomness Collective Flip

- The Randomness Collective Flip pallet from Substrate provides a random function that generates low-influence random values based on the block hashes of the previous 81 blocks.

- Low-influence randomness can be useful when defending against relatively weak adversaries.

- Using this pallet as a randomness source is advisable primarily in low-security situations like testing.
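This minimal, std-only Rust sketch shows why this randomness is only "low-influence". It assumes a simplified mixer that hashes a subject with the last 81 block hashes using `DefaultHasher`. That mixer is a hypothetical stand-in, not the pallet's real code or hash function. The sketch then models an attacker who authors the newest block in the window. The attacker tries candidate hashes (for example, different transaction orderings) until the outcome suits them.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

/// Hypothetical stand-in for a block hash (real hashes are 32 bytes).
type BlockHash = u64;

/// Number of recent block hashes mixed together.
const WINDOW: usize = 81;

/// Mixes `subject` with the most recent `WINDOW` hashes. Illustrative only:
/// not the pallet's actual algorithm or hash function.
fn collective_flip(subject: &[u8], recent: &[BlockHash]) -> u64 {
    let mut hasher = DefaultHasher::new();
    subject.hash(&mut hasher);
    let start = recent.len().saturating_sub(WINDOW);
    for h in &recent[start..] {
        h.hash(&mut hasher);
    }
    hasher.finish()
}

/// The author of the newest block controls one input. By trying candidate
/// blocks (modeled as candidate hashes), it can steer a binary outcome.
fn grind(subject: &[u8], history: &[BlockHash], want_even: bool, tries: u64) -> Option<BlockHash> {
    (0..tries).find(|&candidate| {
        let mut window = history.to_vec();
        window.push(candidate);
        (collective_flip(subject, &window) % 2 == 0) == want_even
    })
}

fn main() {
    // 80 older hashes are fixed; the attacker authors the 81st block.
    let history: Vec<BlockHash> = (1..=80).collect();
    for want_even in [true, false] {
        match grind(b"lottery", &history, want_even, 1_000) {
            Some(hash) => println!("want_even={want_even}: candidate {hash} works"),
            None => println!("want_even={want_even}: no candidate found"),
        }
    }
}
```

Each extra attempt costs the attacker only local computation, which is why this source should stay out of production.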

---v

Case Study - Randomness Collective Flip

---v

Case Study - VRF

- There are two main secure approaches to blockchain randomness in production today: RANDAO and VRF. Polkadot uses VRF.

- VRF (Verifiable Random Function): a mathematical operation that takes some input and produces a random number along with a proof of authenticity that this random number was generated by the submitter.

- With VRF, the proof can be verified by any challenger to ensure the random number generation is valid.

---v

Case Study - VRF

- BABE pallet randomness options:

  - Randomness From Two Epochs Ago

    - Use Case: For consensus protocols that need finality.

    - Timing: Uses data from two epochs ago.

    - Risks: Bias if adversaries control block production at specific times.

  - Randomness From One Epoch Ago (Polkadot Parachain Auctions)

    - Use Case: For on-chain actions that don't need finality.

    - Timing: Uses data from the previous epoch.

    - Risks: Bias if adversaries control block production at the end/start of an epoch.

  - Current Block Randomness

    - Use Case: For actions that need fresh randomness.

    - Timing: Appears fresh but is based on older data.

    - Risks: Weakest form; bias if adversaries don't announce blocks.

- Randomness is affected by other inputs, like external randomness sources.

---v

Case Study - VRF

---v

Mitigation

- All validators can be trusted: use VRF.

- Not all validators can be trusted:

  - If the profit from exploiting randomness is substantially more than the profit from building a block: use a trusted solution (Oracles, MPC, Commit-Reveal, etc.).

  - Otherwise: use VRF.
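Commit-reveal, one of the options listed above, can be sketched in std-only Rust. This sketch assumes a hypothetical `CommitReveal` type. Note that `DefaultHasher` is NOT cryptographically secure; here it only stands in for a real hash such as BLAKE2. The sketch also leaves out deadlines and penalties. A real design needs them, because a participant can still refuse to reveal after seeing the others, which stalls or biases the round.

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::HashMap;
use std::hash::{Hash, Hasher};

/// NOT cryptographically secure: `DefaultHasher` only stands in for a real
/// commitment hash such as BLAKE2 in this sketch.
fn commitment(secret: u64, salt: u64) -> u64 {
    let mut h = DefaultHasher::new();
    (secret, salt).hash(&mut h);
    h.finish()
}

#[derive(Default)]
struct CommitReveal {
    commits: HashMap<u32, u64>, // participant -> commitment
    reveals: HashMap<u32, u64>, // participant -> revealed secret
    reveal_phase: bool,
}

impl CommitReveal {
    fn commit(&mut self, who: u32, c: u64) -> Result<(), &'static str> {
        if self.reveal_phase {
            return Err("commit phase is over");
        }
        if self.commits.contains_key(&who) {
            return Err("already committed");
        }
        self.commits.insert(who, c);
        Ok(())
    }

    fn start_reveal(&mut self) {
        self.reveal_phase = true;
    }

    fn reveal(&mut self, who: u32, secret: u64, salt: u64) -> Result<(), &'static str> {
        if !self.reveal_phase {
            return Err("still in commit phase");
        }
        let expected = *self.commits.get(&who).ok_or("no commitment")?;
        if commitment(secret, salt) != expected {
            return Err("reveal does not match commitment");
        }
        self.reveals.insert(who, secret);
        Ok(())
    }

    /// XOR of all secrets, available only once every committer has revealed.
    fn outcome(&self) -> Option<u64> {
        if self.reveals.len() != self.commits.len() {
            return None;
        }
        Some(self.reveals.values().fold(0, |acc, s| acc ^ *s))
    }
}

fn main() {
    let mut round = CommitReveal::default();
    round.commit(1, commitment(42, 7)).unwrap();
    round.commit(2, commitment(99, 3)).unwrap();
    round.start_reveal();
    println!("wrong secret: {:?}", round.reveal(2, 100, 3));
    round.reveal(1, 42, 7).unwrap();
    round.reveal(2, 99, 3).unwrap();
    println!("outcome: {:?}", round.outcome()); // Some(73), i.e. 42 ^ 99
}
```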

---v

Takeaways

- On-chain randomness is difficult.

- Polkadot uses VRF (e.g., for parachain auctions).

- With VRF (BABE pallet), randomness can only be manipulated by block producers. If all block producers are trusted, the randomness can be trusted too.

- You can also inject trusted randomness into the chain via a trusted oracle.

- Don’t use Randomness Collective Flip in production!

Storage Exhaustion

---v

Challenge

Your chain can run out of storage!

- An inadequate charging mechanism for on-chain storage allows users to occupy storage space without paying the appropriate deposit fees.

- This loophole can be exploited by malicious actors to fill up the blockchain storage cheaply, making it unsustainable to run a node and affecting network performance.

---v

Risk

- Unsustainable growth in blockchain storage, leading to increased costs and potential failure for node operators.

- Increased susceptibility to DoS attacks that exploit the inadequate storage deposit mechanism to clutter the blockchain.

---v

Case Study - Existential Deposit

- If an account's balance falls below the existential deposit, the account is reaped, and its data is deleted to save storage space.

- Existential deposits are required to optimize storage. The absence or undervaluation of existential deposits can lead to DoS attacks.

- The cost of permanent storage is generally not accounted for in the weight calculation for extrinsics, making it possible for an attacker to fill up the blockchain storage by distributing small amounts of native tokens to many accounts.
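Here is a std-only Rust sketch of how an existential deposit (ED) raises the cost of the dust-account attack described above. It uses a hypothetical toy ledger, not `pallet-balances`' actual logic, and assumes an ED of 1,000 units. Transfers that would create an account below ED are rejected, and senders left below ED are reaped.

```rust
use std::collections::HashMap;

/// Hypothetical existential deposit (ED) in the chain's smallest unit.
const EXISTENTIAL_DEPOSIT: u128 = 1_000;

#[derive(Debug, PartialEq)]
enum TransferError {
    InsufficientBalance,
    Overflow,
    BelowExistentialDeposit,
}

/// Toy ledger: only accounts present in the map occupy storage.
struct Ledger {
    balances: HashMap<u64, u128>,
}

impl Ledger {
    fn balance(&self, who: u64) -> u128 {
        self.balances.get(&who).copied().unwrap_or(0)
    }

    fn transfer(&mut self, from: u64, to: u64, amount: u128) -> Result<(), TransferError> {
        if from == to {
            return Ok(());
        }
        let new_from = self.balance(from).checked_sub(amount).ok_or(TransferError::InsufficientBalance)?;
        let new_to = self.balance(to).checked_add(amount).ok_or(TransferError::Overflow)?;
        // A recipient must end up with at least ED, so creating an account
        // (a new storage entry) costs at least ED rather than a dust amount.
        if new_to < EXISTENTIAL_DEPOSIT {
            return Err(TransferError::BelowExistentialDeposit);
        }
        if new_from < EXISTENTIAL_DEPOSIT {
            // Reap the sender: its entry is deleted. The leftover dust is
            // simply dropped in this sketch.
            self.balances.remove(&from);
        } else {
            self.balances.insert(from, new_from);
        }
        self.balances.insert(to, new_to);
        Ok(())
    }
}

fn main() {
    let mut ledger = Ledger { balances: HashMap::from([(1, 5_000)]) };
    println!("dust to new account: {:?}", ledger.transfer(1, 2, 10));
    println!("ED to new account: {:?}", ledger.transfer(1, 2, 1_000));
    println!("leave sender below ED: {:?}", ledger.transfer(1, 3, 3_500));
    println!("accounts in storage: {}", ledger.balances.len());
}
```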

---v

Case Study - Existential Deposit

---v

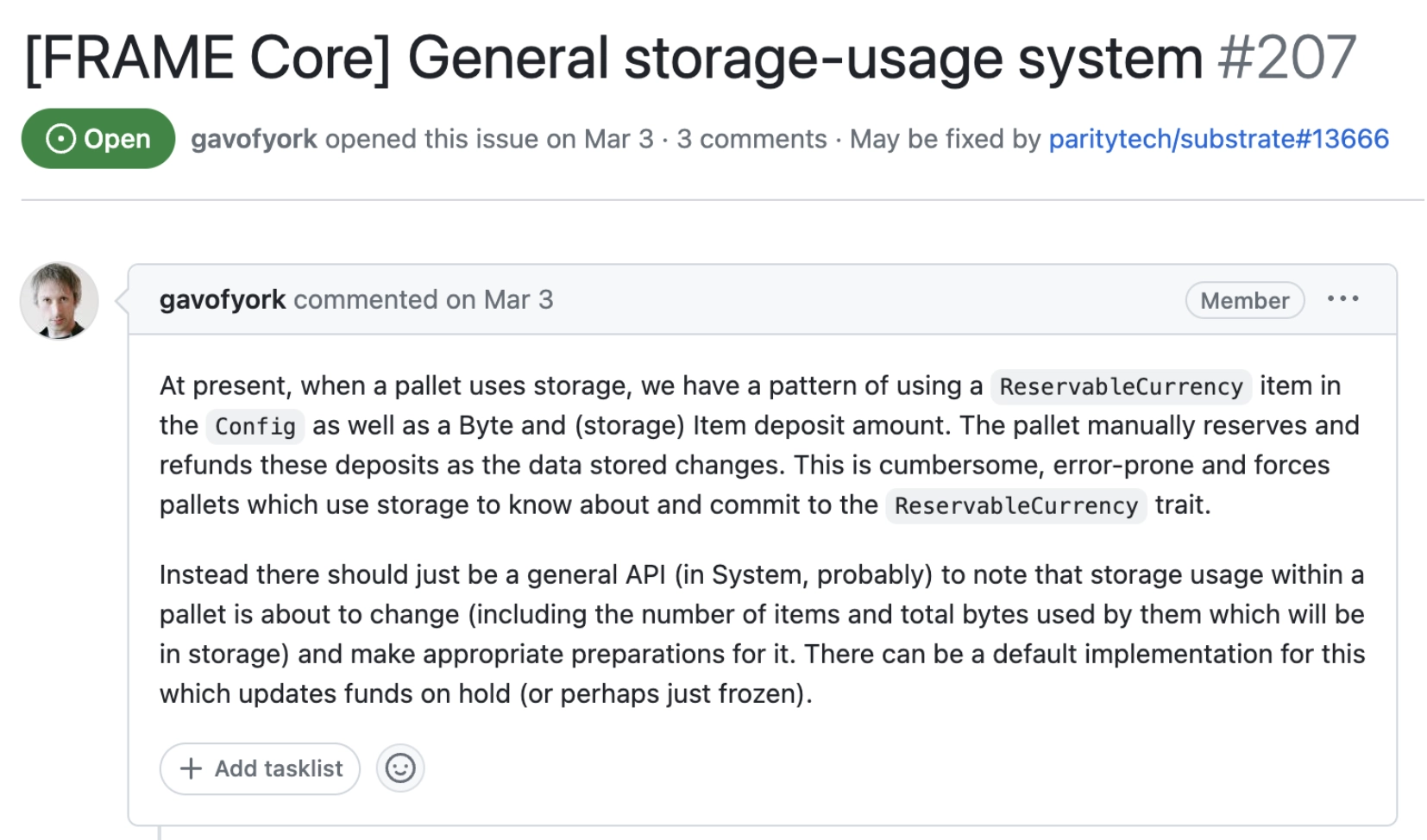

Case Study - General Storage Usage System

---v

Case Study - NFT Pallet Manual Deposit

---v

Mitigation

- Existential Deposit: ensure a value similar to the one defined by the relay chain.

- Storage Deposit: implement deposit logic that makes users reserve funds for the storage they occupy.
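The storage-deposit logic described above can be sketched in std-only Rust. Everything here is hypothetical: `Storage`, `Account`, the deposit constants, and the per-account item cap. Each stored item reserves a deposit proportional to its size. The reserved amount is recorded next to the data, and exactly that amount is unreserved when the item is removed.

```rust
use std::collections::HashMap;

// Hypothetical deposit parameters; real values are chain-specific.
const DEPOSIT_BASE: u128 = 100; // per stored item
const DEPOSIT_PER_BYTE: u128 = 10; // per byte of data
const MAX_ITEMS_PER_ACCOUNT: usize = 16; // hard cap on items per account

#[derive(Debug, PartialEq)]
enum StoreError {
    AlreadyExists,
    TooManyItems,
    InsufficientFree,
    Overflow,
    NotFound,
}

struct Account {
    free: u128,
    reserved: u128,
}

#[derive(Default)]
struct Storage {
    accounts: HashMap<u64, Account>,
    // (owner, item id) -> (data, deposit actually reserved for it)
    items: HashMap<(u64, u32), (Vec<u8>, u128)>,
}

fn deposit_for(len: usize) -> Option<u128> {
    DEPOSIT_PER_BYTE.checked_mul(len as u128)?.checked_add(DEPOSIT_BASE)
}

impl Storage {
    fn store(&mut self, who: u64, id: u32, data: Vec<u8>) -> Result<(), StoreError> {
        if self.items.contains_key(&(who, id)) {
            return Err(StoreError::AlreadyExists);
        }
        if self.items.keys().filter(|k| k.0 == who).count() >= MAX_ITEMS_PER_ACCOUNT {
            return Err(StoreError::TooManyItems);
        }
        let deposit = deposit_for(data.len()).ok_or(StoreError::Overflow)?;
        let acct = self.accounts.get_mut(&who).ok_or(StoreError::InsufficientFree)?;
        // Reserve the deposit (free -> reserved) before writing any data.
        let new_free = acct.free.checked_sub(deposit).ok_or(StoreError::InsufficientFree)?;
        let new_reserved = acct.reserved.checked_add(deposit).ok_or(StoreError::Overflow)?;
        acct.free = new_free;
        acct.reserved = new_reserved;
        self.items.insert((who, id), (data, deposit));
        Ok(())
    }

    fn remove(&mut self, who: u64, id: u32) -> Result<(), StoreError> {
        let acct = self.accounts.get_mut(&who).ok_or(StoreError::NotFound)?;
        let (_data, deposit) = self.items.remove(&(who, id)).ok_or(StoreError::NotFound)?;
        // Unreserve exactly what was reserved at store time.
        acct.reserved = acct.reserved.saturating_sub(deposit);
        acct.free = acct.free.saturating_add(deposit);
        Ok(())
    }
}

fn main() {
    let mut s = Storage::default();
    s.accounts.insert(7, Account { free: 1_000, reserved: 0 });
    s.store(7, 1, vec![0u8; 20]).unwrap(); // reserves 100 + 20 * 10 = 300
    let a = &s.accounts[&7];
    println!("after store: free={} reserved={}", a.free, a.reserved);
    s.remove(7, 1).unwrap(); // the deposit is returned
    let a = &s.accounts[&7];
    println!("after remove: free={} reserved={}", a.free, a.reserved);
}
```

Storing the deposit alongside the data means that a later change to the pricing constants cannot make a refund larger or smaller than what was actually reserved.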

---v

Takeaways

- Always explicitly require a deposit for on-chain storage (in the form of Reserved Balance).

- Deposit is returned to the user when the user removes the data from the chain.

- Ensure the existential deposit is greater than N. To determine N, you can start from values similar to the relay chain's and monitor user activity.

- If possible, limit the amount of data that a pallet can have. Otherwise, ensure some friction (reserve deposit) in the storage usage.

Insufficient Benchmarking

---v

Challenge

Benchmarking can be a difficult task...

- Incorrect or missing benchmarking can lead to overweight blocks, causing network congestion and affecting the overall performance of the blockchain.

- This can happen when the computational complexity or storage access is underestimated, leading to inaccurate weights for extrinsics.

---v

Risk

- Overweight extrinsics can slow down the network.

  - Leads to delays in transaction processing and affects UX.

- Underweight extrinsics can be exploited to spam the network.

  - Leads to a potential Denial of Service (DoS) attack.

---v

Case Study - Benchmark Input Length - Issue

---v

Case Study - Benchmark Input Length - Mitigation

---v

Mitigation

- Run benchmarks under worst-case conditions.

  - For example, use the maximum number of DB reads and writes that could ever happen in an extrinsic.

- The primary goal is to keep the runtime safe.

- The secondary goal is to be as accurate as possible to maximize throughput.

- For code without a hard deadline, use metering.
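The worst-case principle can be sketched in std-only Rust as a weight formula parameterized by input length, in the spirit of the "Benchmark Input Length" case study. All constants and function names are hypothetical; real pallets use weights generated by the benchmarking tooling. The sketch makes three points. The charge grows with the input. The charge assumes the maximum number of reads and writes. Inputs larger than the benchmarked range are rejected.

```rust
// Hypothetical weight coefficients, as if produced by benchmarks on reference
// hardware. In a real pallet these come from the generated weights file.
const BASE_WEIGHT: u64 = 10_000;
const PER_BYTE_WEIGHT: u64 = 50;
const DB_READ_WEIGHT: u64 = 25_000;
const DB_WRITE_WEIGHT: u64 = 100_000;
// Largest input length the benchmark covered.
const MAX_INPUT_LEN: u32 = 4_096;

/// Worst-case weight for an input of `len` bytes, assuming the extrinsic
/// performs at most 2 storage reads and 1 storage write.
fn weight_of(len: u32) -> u64 {
    BASE_WEIGHT + PER_BYTE_WEIGHT * u64::from(len) + 2 * DB_READ_WEIGHT + DB_WRITE_WEIGHT
}

/// Weight charged before dispatch. Inputs longer than what was benchmarked are
/// rejected, because the formula above was never validated for them.
fn pre_dispatch_weight(input: &[u8]) -> Result<u64, &'static str> {
    let len = u32::try_from(input.len()).map_err(|_| "input length does not fit in u32")?;
    if len > MAX_INPUT_LEN {
        return Err("input longer than the benchmarked maximum");
    }
    Ok(weight_of(len))
}

fn main() {
    for size in [0usize, 1_024, 4_096, 10_000] {
        println!("{size} bytes -> {:?}", pre_dispatch_weight(&vec![0u8; size]));
    }
}
```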

---v

Takeaways

- Benchmarking ensures that a parachain's users are not using resources beyond what is available and expected for our network.

- Weight is used to track consumption of limited blockchain resources, based on execution time (on reference hardware) and the size of the data required to create a Merkle proof.

- 1 second of compute on different computers allows for different amounts of computation.

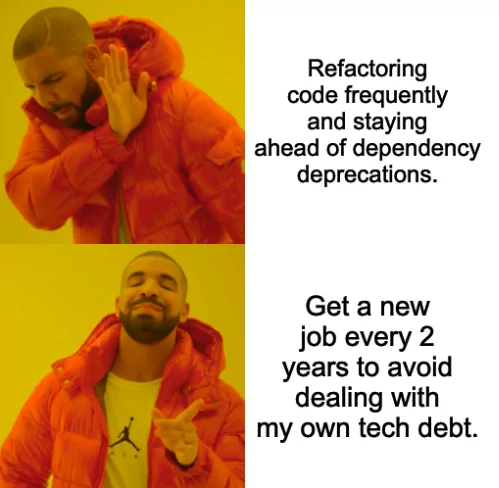

Outdated Crates

---v

Challenge

Dependencies can become a nightmare!

- Using outdated or known vulnerable components, such as pallets or libraries, in a Substrate runtime can expose the system to a broad range of security risks and exploits.

---v

Risk

- Exposure to known vulnerabilities that could be exploited by attackers.

- Compromised network integrity and security, leading to potential data breaches or financial loss.

---v

Case Study - Serde Precompiled Binary

- Polkadot uses `serde` with the `derive` feature as a dependency.

- Issue: Serde developers decided to ship `serde_derive` as a precompiled binary (Article).

- Mitigation: The dependency was pinned to a version that doesn't include the precompiled binary.

A trustless system, such as Polkadot, can't blindly trust binaries.

---v

Mitigation

- Always use the latest stable version of Polkadot, Substrate, Cumulus, and any other third-party crate.

- If possible, avoid using too many crates.

- Use tools such as `cargo audit` or `cargo vet` to monitor the state of your system's dependencies.

- Don't use dependencies that include precompiled binaries.

---v

Takeaways

- Outdated crates can lead to vulnerabilities in your system even if the crate itself doesn't have a vulnerability.

- Outdated crates can contain known vulnerabilities that could be easily exploited in your system.

- Don’t use the latest version of a crate (in production) until it is declared as stable.

XCM Misconfiguration

---v

Challenge

Configuring XCM correctly requires a lot of attention!

- XCM needs to be configured through different pallets and configs.

- Determining the access control to the XCM pallet and the incoming queues needs to be done carefully.

- For new parachains, it is difficult to determine which XCM messages are needed and which are not.

- If the config is not set up correctly, the chain could be vulnerable to attacks. It could become a spam target if incoming XCM messages are not treated as untrusted and sanitized properly. It could even be used as a bridge to attack other parachains if good access control is not enforced on send operations.

---v

Risk

- Unauthorized manipulation of the blockchain state, compromising the network's integrity.

- Execution of unauthorized transactions, leading to potential financial loss.

- Being used as an attack channel against other parachains.

---v

Case Study - Rococo Bridge Hub - Description

- The `MessageExporter` type (`BridgeHubRococoOrBridgeHubWococoSwitchExporter`) in the Rococo Bridge Hub `XcmConfig` used the `unimplemented!()` macro in its `validate` and `deliver` methods, which is equivalent to the `panic!()` macro. This exposed the Rococo Bridge Hub runtime to a non-skippable panic reachable by any parachain allowed to send messages to the Rococo Bridge Hub.

- This issue was trivial to trigger for anyone who can send messages to the Bridge Hub. The only thing needed was a valid XCM message that includes an `ExportMessage` instruction trying to bridge to a network that is not implemented.
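The bug pattern and its fix can be sketched in std-only Rust. The `MessageExporter` trait and `Network` enum below are hypothetical and much simpler than the real Polkadot SDK exporter interfaces. The point is only that an unsupported destination should produce an error rather than reach `unimplemented!()`.

```rust
/// Hypothetical, heavily simplified stand-in for an XCM exporter. The real
/// Polkadot SDK exporter trait has different types and signatures.
#[derive(Debug, Clone, Copy, PartialEq)]
enum Network {
    Wococo,
    Other(u8),
}

#[derive(Debug, PartialEq)]
enum ExportError {
    Unroutable,
}

trait MessageExporter {
    /// Returns a (hypothetical) delivery fee if the message can be exported.
    fn validate(&self, dest: Network, msg: &[u8]) -> Result<u64, ExportError>;
}

/// Mirrors the bug pattern: any unsupported destination reaches a panic.
struct PanickingExporter;

impl MessageExporter for PanickingExporter {
    fn validate(&self, dest: Network, msg: &[u8]) -> Result<u64, ExportError> {
        match dest {
            Network::Wococo => Ok(msg.len() as u64),
            _ => unimplemented!(), // panics; inside a runtime this cannot be skipped
        }
    }
}

/// Fixed pattern: unsupported destinations return a normal error.
struct SafeExporter;

impl MessageExporter for SafeExporter {
    fn validate(&self, dest: Network, msg: &[u8]) -> Result<u64, ExportError> {
        match dest {
            Network::Wococo => Ok(msg.len() as u64),
            Network::Other(_) => Err(ExportError::Unroutable),
        }
    }
}

fn main() {
    let dest = Network::Other(42);
    println!("safe exporter: {:?}", SafeExporter.validate(dest, b"export me"));
    let caught = std::panic::catch_unwind(|| PanickingExporter.validate(dest, b"export me"));
    println!("panicking exporter panicked: {}", caught.is_err());
}
```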

---v

Case Study - Rococo Bridge Hub - Issue

---v

Case Study - Rococo Bridge Hub - XCM Config

---v

Mitigation

- Continuously verify all your configs and compare them with other chains.

- In the XCM pallet, limit usage of `execute` and `send` until XCM security guarantees can be ensured.

- In the XCM executor, ensure correct access control in your `XcmConfig`:

  - Only trusted sources can be allowed. Filter origins with `OriginConverter`.

  - Only the specific message structures your parachain needs to receive can be accepted. Filter message structures with `Barrier` (general), `SafeCallFilter` (transact), `IsReserve` (reserve), `IsTeleporter` (teleport), `MessageExporter` (export), `XcmSender` (send), etc.

- In the XCMP queue, ensure only trusted channels are open.
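A deny-by-default barrier can be sketched in std-only Rust. The `Origin`, `Instruction`, `Message`, and `AllowlistBarrier` types are hypothetical simplifications; the real `Barrier` in `XcmConfig` works on the actual XCM types. The sketch accepts only trusted origins that send the exact message shape the chain expects.

```rust
#[derive(Debug)]
enum Origin {
    Parent,
    Sibling(u32), // hypothetical parachain id
    Other,
}

#[derive(Debug, Clone)]
enum Instruction {
    WithdrawAsset(u128),
    BuyExecution,
    DepositAsset,
    Transact(Vec<u8>),
}

struct Message {
    origin: Origin,
    instructions: Vec<Instruction>,
}

struct AllowlistBarrier {
    trusted_siblings: Vec<u32>,
}

impl AllowlistBarrier {
    fn should_execute(&self, msg: &Message) -> Result<(), &'static str> {
        // 1. Origin filter: deny by default.
        let trusted = match msg.origin {
            Origin::Parent => true,
            Origin::Sibling(id) => self.trusted_siblings.contains(&id),
            Origin::Other => false,
        };
        if !trusted {
            return Err("untrusted origin");
        }
        // 2. Structure filter: accept only "withdraw, pay for execution, deposit".
        match msg.instructions.as_slice() {
            [Instruction::WithdrawAsset(_), Instruction::BuyExecution, Instruction::DepositAsset] => Ok(()),
            _ => Err("unexpected message structure"),
        }
    }
}

fn main() {
    let barrier = AllowlistBarrier { trusted_siblings: vec![2000] };
    let transfer = vec![Instruction::WithdrawAsset(10), Instruction::BuyExecution, Instruction::DepositAsset];
    let messages = [
        Message { origin: Origin::Sibling(2000), instructions: transfer.clone() },
        Message { origin: Origin::Sibling(3000), instructions: transfer },
        Message { origin: Origin::Parent, instructions: vec![Instruction::Transact(vec![0xde, 0xad])] },
    ];
    for m in &messages {
        println!("{:?} -> {:?}", m.origin, barrier.should_execute(m));
    }
}
```

Matching the whole instruction slice, rather than scanning for forbidden instructions, keeps the filter an allowlist. New or unexpected instructions are rejected until someone deliberately allows them.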

---v

Takeaways

- XCM development and audits are still ongoing, so its security guarantees cannot be ensured at the moment.

- Allowing any user to use `execute` and `send` can have a serious impact on your parachain.

- Incoming XCMs need to be handled as untrusted and sanitized properly.

- Insufficient Access Control in your parachain can enable bad actors to attack other parachains through your system.

Unsafe Math

---v

Challenge

- Unsafe math operations in the codebase can lead to integer overflows/underflows, divisions by zero, conversion truncation/overflow and incorrect end results which can be exploited by attackers to manipulate calculations and gain an unfair advantage.

- This mainly involves the use of primitive arithmetic operations.

---v

Risk

- Manipulation of account balances, leading to unauthorized transfers or artificial inflation of balances.

- Potential disruption of network functionalities that rely on accurate arithmetic calculations.

- Incorrect calculations, leading to unintended consequences like incorrect account balances or transaction fees.

- Potential for attackers to exploit the vulnerability to manipulate outcomes in their favor.

---v

Case Study - Frontier Balances - Description

- Frontier's CVE-2022-31111 disclosure describes an issue in converting balances from EVM to Substrate. The pallet did not handle the conversion correctly, so the transferred amount could appear different from the intended one, leading to a possible overflow.

- This is risky for two reasons:

- It could lead to wrong calculations, like messed-up account balances.

- People with bad intentions could use this error to get unfair advantages.

- To fix this, it's important to double-check how these conversions are done to make sure the numbers are accurate.

---v

Case Study - Frontier Balances - Issue

---v

Case Study - Frontier Balances - Mitigation

---v

Mitigation

- Arithmetic

  - Simple solution but (sometimes) more costly: use checked/saturating functions like `checked_div`.

  - Complex solution but (sometimes) less costly: validate before executing primitive operations. For example: `balance >= transfer_amount`.

- Conversions

  - Avoid downcasting values. Otherwise, use methods like `unique_saturated_into` instead of methods like `low_u64`.

  - Your system should be designed to avoid downcasting!
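Both arithmetic approaches and the conversion advice can be illustrated in plain Rust, using only the standard library. The `Balance` alias and helper names are hypothetical. `saturating_convert` only mimics the idea behind `unique_saturated_into` and is not that API.

```rust
/// Hypothetical balance type (runtimes commonly use u128).
type Balance = u128;

/// Simple option: checked operations return `None` instead of wrapping.
fn checked_transfer(from: Balance, to: Balance, amount: Balance) -> Option<(Balance, Balance)> {
    Some((from.checked_sub(amount)?, to.checked_add(amount)?))
}

/// Alternative: validate first, then use primitive operators, which the
/// checks above guarantee cannot overflow or underflow.
fn validated_transfer(from: Balance, to: Balance, amount: Balance) -> Result<(Balance, Balance), &'static str> {
    if from < amount {
        return Err("insufficient balance");
    }
    if Balance::MAX - to < amount {
        return Err("recipient balance would overflow");
    }
    Ok((from - amount, to + amount))
}

/// Division by a caller-controlled value: `checked_div` yields `None` on zero.
fn per_holder(total: Balance, holders: Balance) -> Option<Balance> {
    total.checked_div(holders)
}

/// Downcasting with `as` silently keeps only the low 64 bits.
fn truncating(value: u128) -> u64 {
    value as u64
}

/// Fallible conversion: out-of-range values are reported, not truncated.
fn checked_convert(value: u128) -> Option<u64> {
    u64::try_from(value).ok()
}

/// Saturating conversion, similar in spirit to `unique_saturated_into`.
fn saturating_convert(value: u128) -> u64 {
    u64::try_from(value).unwrap_or(u64::MAX)
}

fn main() {
    let big = (1u128 << 64) + 5;
    println!("{:?}", checked_transfer(5, 0, 10));
    println!("{:?}", validated_transfer(10, Balance::MAX, 1));
    println!("{:?}", per_holder(100, 0));
    println!("{} vs {:?} vs {}", truncating(big), checked_convert(big), saturating_convert(big));
}
```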

---v

Takeaways

- In debug builds (e.g., while testing pallets), a primitive arithmetic operation that overflows/underflows causes a panic (crash). In release builds (production), overflow checks are disabled by default, so the value silently wraps around instead. Division by zero panics in both. Always ensure no unexpected overflow/underflow can happen.

- Checked operations use slightly more computational power than primitive operations.

- Some conversions never panic, even in debug builds: integer `as` casts and methods like `low_u64` silently truncate, so a bad conversion can pass tests unnoticed. Always ensure no unexpected overflow/underflow or truncation can happen.

- In a conversion, the smaller the target type, the greater the chance of overflow/underflow or truncation.

Replay Issues

---v

Challenge

- Replay issues, most commonly arising from unsigned extrinsics, can lead to spamming and, in certain scenarios, double-spending attacks.

- This happens when nonces are not managed correctly, making it possible for transactions to be replayed.

---v

Risk

- Spamming the network with repeated transactions, leading to congestion and reduced performance.

- Potential for double-spending attacks, which can compromise the integrity of the blockchain.

---v

Case Study - Frontier STF - Description

- CVE-2021-41138 describes the security issues that arose from the changes made in Frontier #482. Before this update, the function `validate_unsigned` was used to check whether a transaction was valid. This function was part of the State Transition Function (STF), which is important when a block is being made. After the update, a new function, `validate_self_contained`, did the job, but it was not part of the STF. This meant a malicious validator could submit invalid transactions, and even reuse transactions from a different chain.

- In the following sample from Frontier, it is possible to observe how the `do_transact` function was used before the update, where `validate_self_contained` was not used.

- In a later commit, this was patched by adding the validations during block production. Among the changes, one can observe the replacement of `do_transact` with `validate_transaction_in_block`, which contains the transaction-validation logic that was previously in `validate_self_contained`.

---v

Case Study - Frontier STF - Issue

---v

Case Study - Frontier STF - Mitigation

---v

Mitigation

- Ensure that the data your system receives from untrustworthy sources:

  - Can't be reused, by implementing a nonce mechanism.

  - Is intended for your system, by checking identifiers such as IDs, hashes, etc.
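Here is a std-only Rust sketch of both checks. It assumes a hypothetical `Payload` that carries a chain identifier and a per-sender nonce. In a real system these fields must also be covered by a signature, which is omitted here.

```rust
use std::collections::HashMap;

/// Hypothetical payload from an untrusted source (e.g., an unsigned extrinsic
/// or an off-chain relayer). The signature check is omitted in this sketch.
#[derive(Debug, Clone)]
struct Payload {
    chain_id: u64,
    sender: u64,
    nonce: u64,
    amount: u128,
}

#[derive(Debug, PartialEq)]
enum ReplayError {
    WrongChain,
    StaleNonce,
    FutureNonce,
}

struct ReplayGuard {
    our_chain_id: u64,
    next_nonce: HashMap<u64, u64>, // sender -> next expected nonce
}

impl ReplayGuard {
    fn accept(&mut self, p: &Payload) -> Result<(), ReplayError> {
        // 1. Reject payloads meant for another chain (cross-chain replay).
        if p.chain_id != self.our_chain_id {
            return Err(ReplayError::WrongChain);
        }
        // 2. Require exactly the next nonce, so each payload is usable once.
        let expected = self.next_nonce.entry(p.sender).or_insert(0);
        if p.nonce < *expected {
            return Err(ReplayError::StaleNonce);
        }
        if p.nonce > *expected {
            return Err(ReplayError::FutureNonce);
        }
        *expected += 1;
        Ok(())
    }
}

fn main() {
    let mut guard = ReplayGuard { our_chain_id: 42, next_nonce: HashMap::new() };
    let p = Payload { chain_id: 42, sender: 1, nonce: 0, amount: 5 };
    println!("first submission: {:?}", guard.accept(&p));
    println!("replayed: {:?}", guard.accept(&p));
    let foreign = Payload { chain_id: 7, nonce: 1, ..p.clone() };
    println!("from another chain: {:?}", guard.accept(&foreign));
}
```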

---v

Takeaways

- Replay issues can lead to serious damage.

- Even if the chain ensures a runtime transaction can't be replayed, external actors could reproduce a similar output by passing similar inputs if those inputs are not correctly verified.

Unbounded Decoding

---v

Challenge

- Decoding objects without a nesting depth limit can lead to stack exhaustion, making it possible for attackers to craft highly nested objects that cause a stack overflow.

- This can be exploited to disrupt the normal functioning of the blockchain network.

---v

Risk

- Stack exhaustion, which can lead to network instability and crashes.

- Potential for Denial of Service (DoS) attacks by exploiting the stack overflow vulnerability.

---v

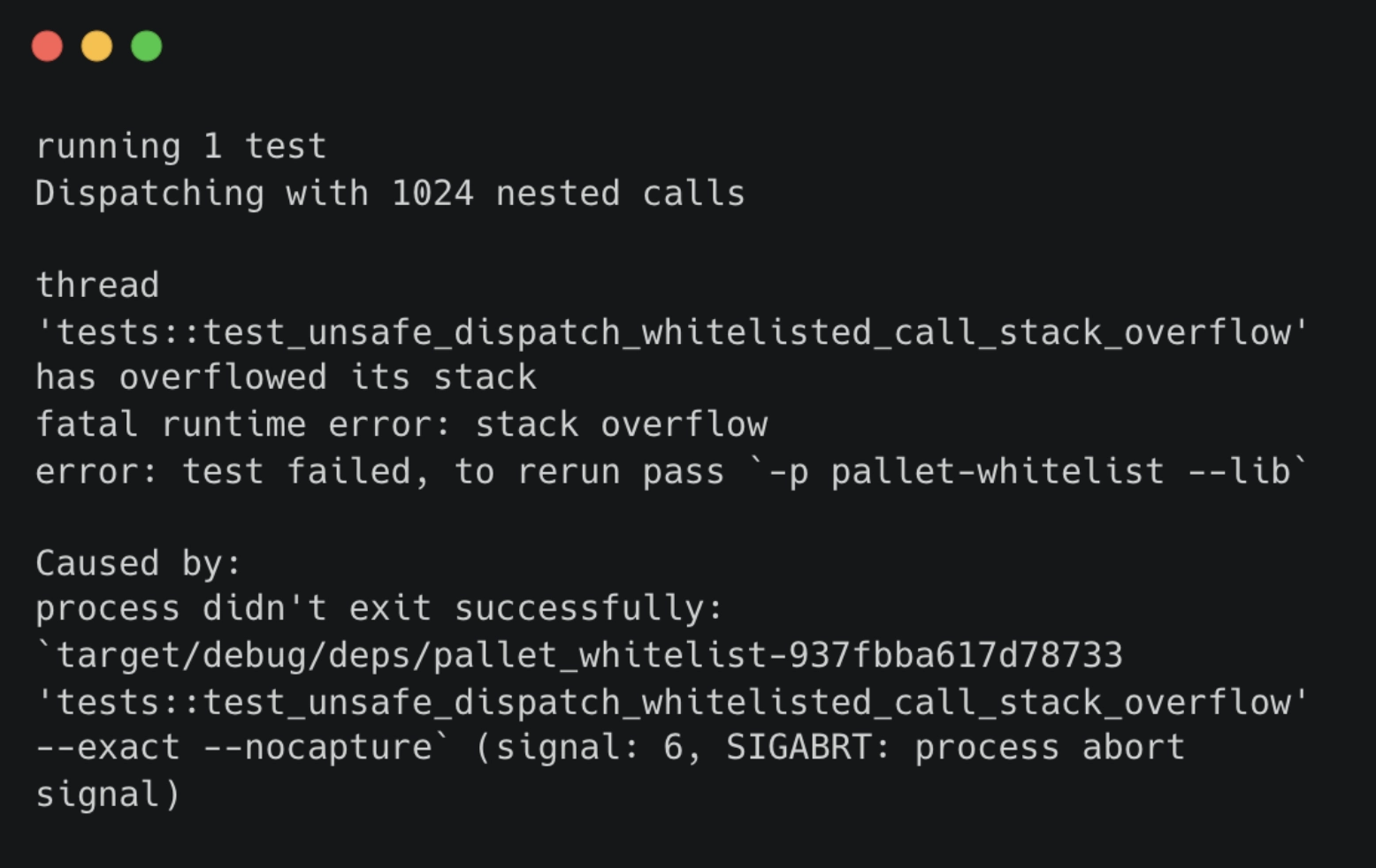

Case Study - Whitelist Pallet - Description

- In Substrate #10159, the `whitelist-pallet` was introduced. This pallet contains the extrinsic `dispatch_whitelisted_call`, which allows dispatching a previously whitelisted call.

- In order to be dispatched, the call needs to be decoded, and this was being done with the `decode` method.

- Auditors detected that this method could lead to a stack overflow and suggested that the developers use `decode_with_depth_limit` to mitigate the issue.

- Risk was limited because the origin had specific restrictions. However, if the issue were triggered, the resulting stack overflow could make a whole block invalid, and the chain could get stuck failing to produce new blocks.

---v

Case Study - Whitelist Pallet - Issue

---v

Case Study - Whitelist Pallet - Exploit PoC

---v

Case Study - Whitelist Pallet - Exploit Results

---v

Case Study - Whitelist Pallet - Mitigation

---v

Mitigation

- Use the `decode_with_depth_limit` method instead of the `decode` method.

- Call `decode_with_depth_limit` with a depth limit lower than the depth that can cause a stack overflow.
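The idea behind depth-limited decoding can be sketched with a hand-written recursive decoder in std-only Rust. The byte format and `Call` type are invented for illustration; this is neither SCALE nor `parity-scale-codec`'s implementation. The decoder refuses to recurse past `max_depth`, so a maliciously nested input fails with an error instead of exhausting the stack.

```rust
/// Hypothetical call type in which a call may wrap another call.
#[derive(Debug, PartialEq)]
enum Call {
    Remark(u8),
    Wrapped(Box<Call>),
}

#[derive(Debug, PartialEq)]
enum DecodeError {
    UnexpectedEnd,
    BadTag(u8),
    TooDeep,
    TrailingBytes,
}

/// Reads one byte and advances the input slice.
fn take_byte(input: &mut &[u8]) -> Result<u8, DecodeError> {
    let current: &[u8] = *input;
    let (&byte, rest) = current.split_first().ok_or(DecodeError::UnexpectedEnd)?;
    *input = rest;
    Ok(byte)
}

/// Invented format: tag 0x00 = Remark + 1 byte, tag 0x01 = Wrapped + nested call.
/// `depth_left` bounds the recursion, and therefore the stack usage.
fn decode_call(input: &mut &[u8], depth_left: u32) -> Result<Call, DecodeError> {
    if depth_left == 0 {
        return Err(DecodeError::TooDeep);
    }
    match take_byte(input)? {
        0x00 => Ok(Call::Remark(take_byte(input)?)),
        0x01 => Ok(Call::Wrapped(Box::new(decode_call(input, depth_left - 1)?))),
        tag => Err(DecodeError::BadTag(tag)),
    }
}

/// Decodes a whole buffer, rejecting nesting deeper than `max_depth`
/// and any bytes left over after the call.
fn decode_with_limit(bytes: &[u8], max_depth: u32) -> Result<Call, DecodeError> {
    let mut input = bytes;
    let call = decode_call(&mut input, max_depth)?;
    if !input.is_empty() {
        return Err(DecodeError::TrailingBytes);
    }
    Ok(call)
}

fn main() {
    // 0x01 0x01 0x00 0x07 = Wrapped(Wrapped(Remark(7)))
    println!("{:?}", decode_with_limit(&[0x01, 0x01, 0x00, 0x07], 8));
    // An attacker-crafted blob with 100_000 nesting levels is rejected early
    // instead of recursing 100_000 times.
    let mut deep = vec![0x01u8; 100_000];
    deep.extend_from_slice(&[0x00, 0x01]);
    println!("{:?}", decode_with_limit(&deep, 64));
}
```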

---v

Takeaways

- Decoding untrusted objects can lead to stack overflow.

- Stack overflow can lead to network instability and crashes.

- Always ensure a maximum depth while decoding data in a pallet.

Verbosity Issues

---v

Challenge

- Lack of detailed logs from collators, nodes, or RPC can make it difficult to diagnose issues, especially in cases of crashes or network halts.

- This lack of verbosity can hinder efforts to maintain system health and resolve issues promptly.

---v

Risk

- Difficulty in diagnosing and resolving system issues, leading to extended downtime.

- Reduced ability to identify and mitigate security threats compromising network integrity.

---v

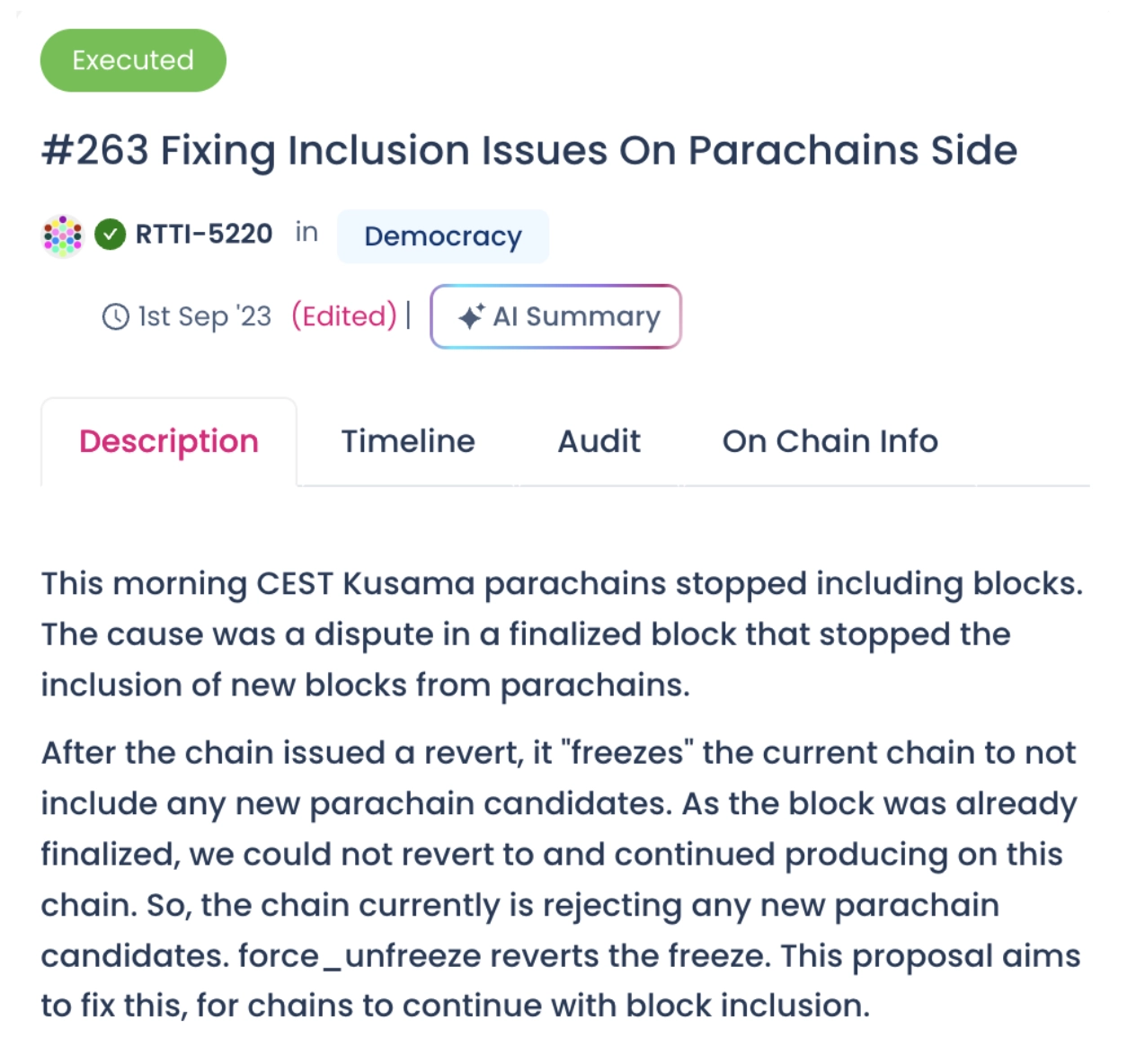

Case Study

- During a recent Kusama incident, the chain stopped producing blocks for several hours.

- Engineers needed to check all the logs to understand what caused or triggered the incident.

- The logging system allowed them to detect that the cause was a dispute in a finalized block.

- Consensus systems are complex and almost never halt, but when they do, it is difficult to recreate the scenario that led to it.

- A good logging system can therefore help to reduce downtime.

---v

Mitigation

- Regularly review logs to identify any suspicious activity, and determine if there is sufficient verbosity.

- Implement logs in the critical parts of your pallets.

- Implement dashboards to detect anomalous patterns in logs and metrics. A great example is Grafana, which some node maintainers use to stay aware of recent issues.

---v

Takeaways

- Logs are extremely important to diagnose and resolve system issues.

- Insufficient verbosity can lead to extended downtime.

Inconsistent Error Handling

---v

Challenge

- Errors/exceptions need to be handled consistently to avoid attack vectors in critical parts of the system.

- While processing a collection of items, if one of them fails, the whole batch fails. This can be exploited by an attacker that wants to block the execution. This can become a critical problem if the processing is happening in a privileged extrinsic like a hook.

---v

Risk

- Privileged extrinsics Denial of Service (DoS).

- Unexpected behavior in the system.

---v

Case Study - Decode Concatenated Data - Issue

---v

Case Study - Decode Concatenated Data - Issue

---v

Mitigation

- Verify that the error handling is consistent with the extrinsic logic.

- During batch processing:

  - If all items always need to be processed: propagate the error directly to stop the batch processing.

  - If only some items need to be processed: handle the error and continue the batch processing.
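Both batch strategies can be sketched in std-only Rust. The `process` function and its rule that odd ids fail are hypothetical placeholders for real per-item logic.

```rust
#[derive(Debug, PartialEq)]
enum ItemError {
    Invalid(u32),
}

/// Hypothetical per-item work: odd ids are treated as invalid.
fn process(id: u32) -> Result<u64, ItemError> {
    if id % 2 == 1 {
        Err(ItemError::Invalid(id))
    } else {
        Ok(u64::from(id) * 10)
    }
}

/// All-or-nothing: collecting into `Result` stops at the first error,
/// so one failing item aborts the whole batch.
fn process_all(ids: &[u32]) -> Result<Vec<u64>, ItemError> {
    ids.iter().map(|&id| process(id)).collect()
}

/// Best-effort: failures are recorded and the loop keeps going, so a single
/// bad item (possibly planted by an attacker) cannot block the rest.
fn process_best_effort(ids: &[u32]) -> (Vec<u64>, Vec<ItemError>) {
    let mut done = Vec::new();
    let mut failed = Vec::new();
    for &id in ids {
        match process(id) {
            Ok(value) => done.push(value),
            Err(e) => failed.push(e),
        }
    }
    (done, failed)
}

fn main() {
    let batch: [u32; 3] = [2, 3, 4];
    println!("all-or-nothing: {:?}", process_all(&batch));
    println!("best-effort: {:?}", process_best_effort(&batch));
}
```

In a privileged context such as a hook, the best-effort variant keeps one malicious entry from stalling every later item. It should still be paired with a bound on the batch size.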

---v

Takeaways

- Ensure errors are handled consistently.

- Optimize your batch processing to handle errors instead of losing execution time.