Course Materials Book

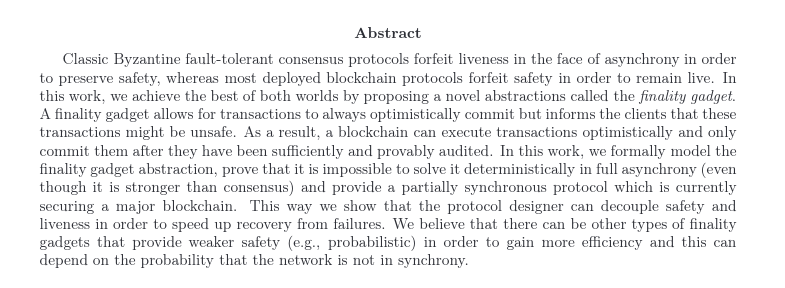

This book is the home of the majority of materials used within the Core Developer Track of the Polkadot Blockchain Academy.

Read the Book

We suggest the online version for general use, but cloning, installing, and building this book offline is a great option on-the-go.

Hosted Online

The latest version is hosted at: https://polkadot-blockchain-academy.github.io/pba-book/

Build Offline

The Core Developer Track of the Academy is Rust heavy and as such, you need to install rust before you can continue.

In order to make your life easy 😉, there is a set of tasks that use cargo make.

With cargo make installed, you can list all tasks included to facilitate further installation, building, serving, formatting, and more with:

# Run from the top-level working dir of this repo

makers --list-all-steps

The tasks should be self-explanatory, if they are not - please file an issue to help us make them better.

License and Use Policies

All materials found within this repository are licensed under Mozilla Public License Version 2.0 - See the License for details.

In addition to the license, we ask you read and respect the Academy's Code of Conduct and help us foster a healthy and scholarly community of high academic integrity.

Learn more about and apply for the next Academy today!

🪄 Using this Book

This book contains all the Academy's content in sequence.

It is intended to read from start to finish, in order, and following the index.md page for each module.

📔 How to use mdBook

This book is made with mdBook - a Rust native documentation tool. Please give the official docs there a read to see what nice features are included. To name a few key items:

| Icon | Description |

|---|---|

| Opens and closes the chapter listing sidebar. | |

| Opens a picker to choose a different color theme. | |

| Opens a search bar for searching within the book. | |

| Instructs the web browser to print the entire book. | |

| Opens a link to the website that hosts the source code of the book. | |

| Opens a page to directly edit the source of the page you are currently reading. |

Search

Pressing the search icon () in the menu bar, or pressing the S key on the keyboard will open an input box for entering search terms.

Typing some terms will show matching chapters and sections in real time.

Clicking any of the results will jump to that section. The up and down arrow keys can be used to navigate the results, and enter will open the highlighted section.

After loading a search result, the matching search terms will be highlighted in the text.

Clicking a highlighted word or pressing the Esc key will remove the highlighting.

🎞️ How-to use reveal.js Slides

Most pages include embedded slides that have a lot of handy features.

These are with reveal-md: a tool built with reveal.js to allow for Markdown only slides, with a few extra syntax items to make your slides look and feel awesome with very little effort.

📝 Be use to have the slides

iframeon a page active (🖱️ click on it) to use slide keybindings... Otherwise those are captured by themdbooktools! (sis search for the book, and speaker notes for the slides)

Be a power user of these by using the keybindings to interact with them:

- Use

spaceto navigate all slides: top to bottom, left to right.- Use

down/uparrow keys to navigate vertical slides. - Use

left/rightarrow keys to navigate horizontal slides.

- Use

- Press

Escoroto see anoverviewview that arrow keys can navigate. - Press

sto open up speaker view.

👀 Speaker notes include very important information, not to be missed!

💫 Slides Demo

Tryout those keybindings (🖱️ click on the slides to start) below:

(🖱️ expand) Raw Markdown of Slides Content

--- title: How-to use Reveal.js Slides description: How to use reveal.js duration: 5 minuets ---How-to use Reveal.js Slides

These slides are built with reveal.js.

These slides serve as a feature demo of reveal for you! 🎉

What are we going to see:

-

How to use Reveal.js Features

- Useful

reveal.jstips - Speaker Notes

- Useful

How to use Reveal.js Features

Press the down/up keys to navigate _vertical slides_

Try doing down a slide.

---v

Use the keybindings!

-

Overview mode: “O” to see a birds-eye view of your presentation, “ESC” to return to the highlighted slide (you can quickly navigate with arrows)

-

Full-screen: “F”, “ESC” to exit full-screen mode

-

Speaker mode: “S” it synchronizes 2 windows: one with the presentation, and another with a timer and all speaker notes!

-

Zoom-in: ALT+click make the view zoom at the position of your mouse’s pointer; very useful to look closely at a picture or chart surrounded by too much bullet points.

---v

Speaker Notes & Viewer

Press the s key to bring up a popup window with speaker view

You need to unblock popups to have the window open

Notes:

This is a note just for you. Set under a line in your slide starting with "Note:" all

subsequent lines are just seen in speaker view.

Enjoy!

😎

☝️ All slides Slides Content is available on all pages.

This enables search to work throughout this book to jump-to-location for any keywords you remember related to something covered in a lesson 🚀.

📖 Learn More

📒 Book Overview

This book contains a set of course materials covering both the conceptual underpinnings and hands-on experience in developing blockchain and web3 technologies. Students will be introduced to core concepts in economic, cryptographic, and computer science fields that lay the foundation for approaching web3 development, as well as hands-on experience developing web3 systems in Rust, primarily utilizing the ecosystem of tooling provided by Polkadot and Substrate.

🙋 This book is designed specifically for use in an in-person course. This provides far more value from these materials than an online only, self-guided experience could provide.

✅ The Academy encourages everyone to apply to the program Our program is facilitated a few times a year at prestigious places around the world, with on the order of ~50-100 students per cohort.

👨🎓 Learning Outcomes

By the end of the Polkadot Blockchain Academy, students will be able to:

- Apply economic, cryptographic, and computer science concepts to web3 application design

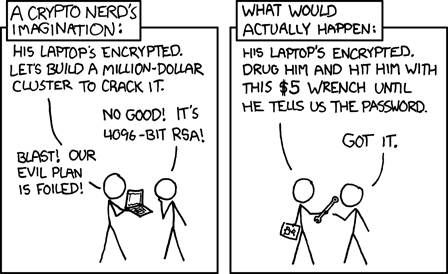

- Robustly design and evaluate security of web3, both at the protocol and user application level

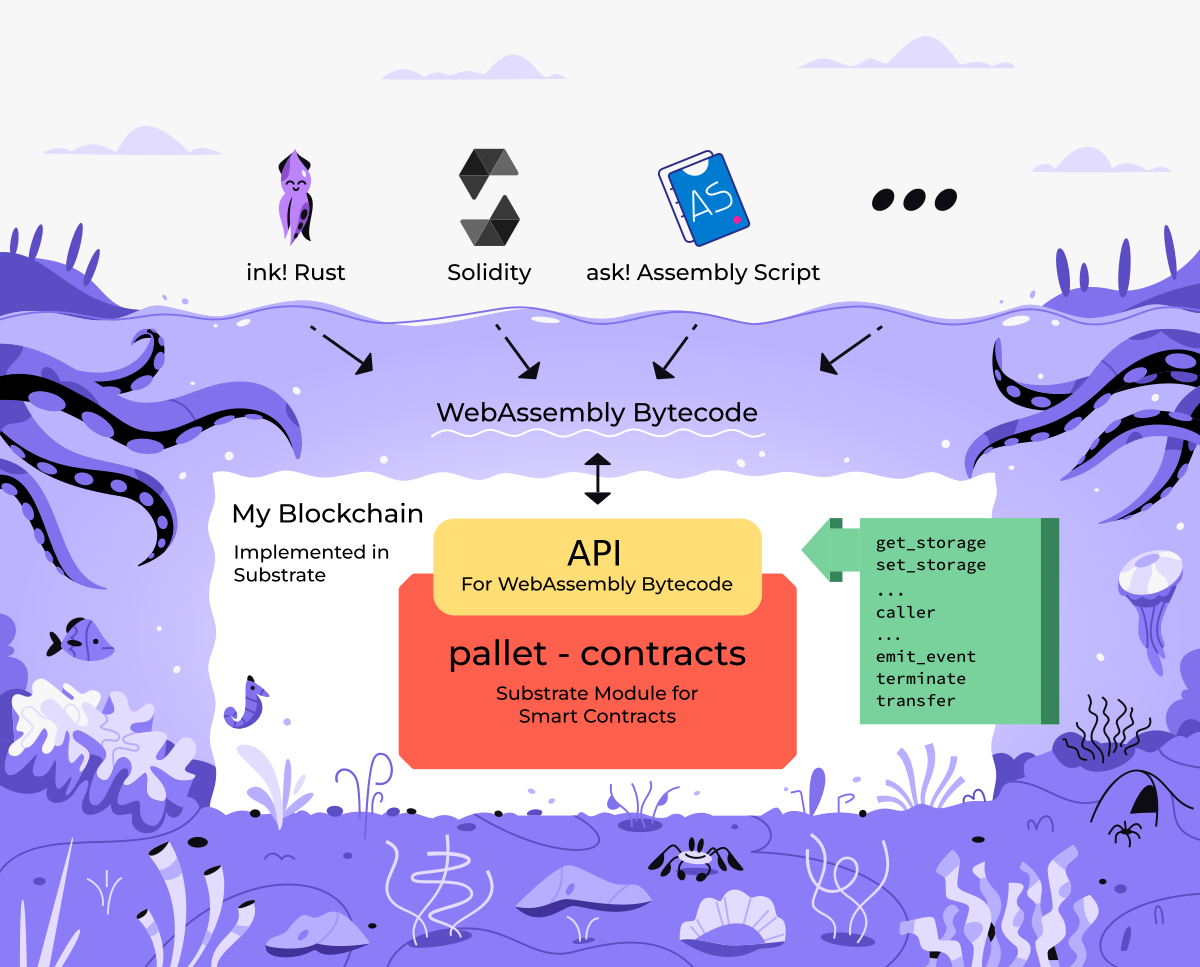

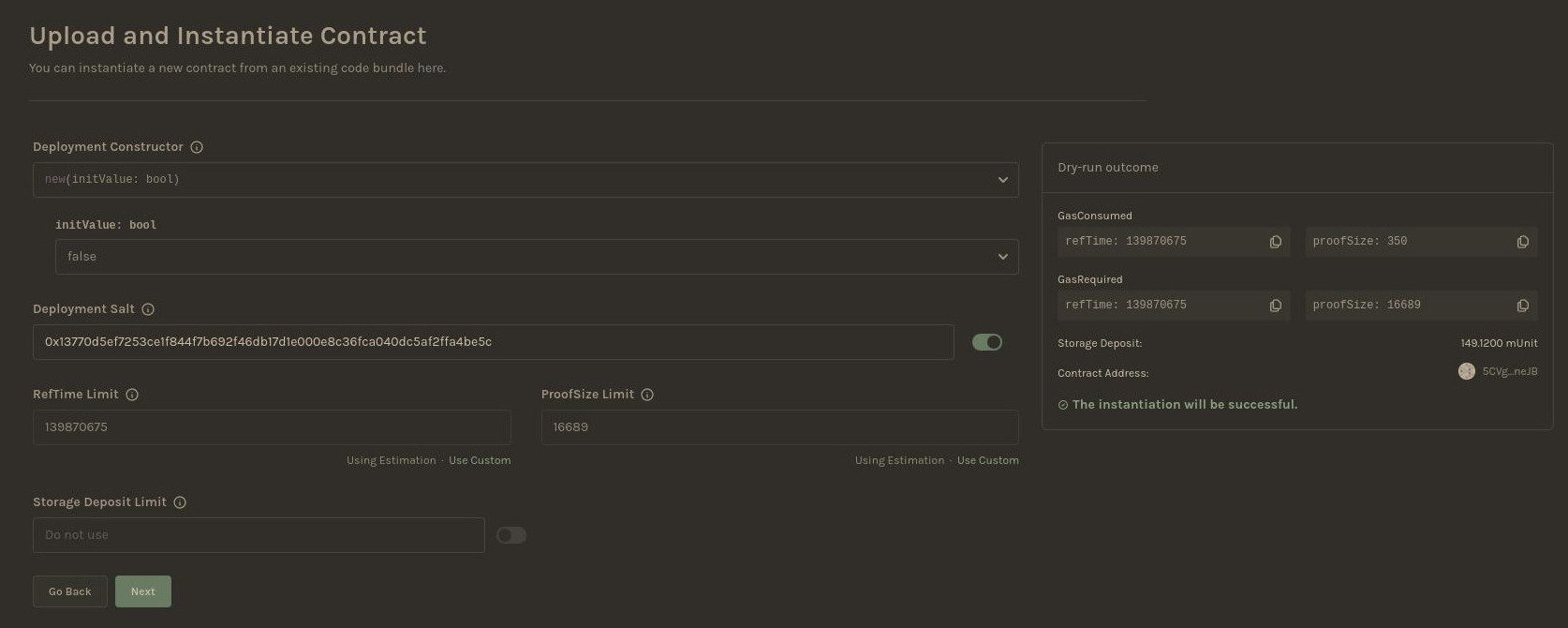

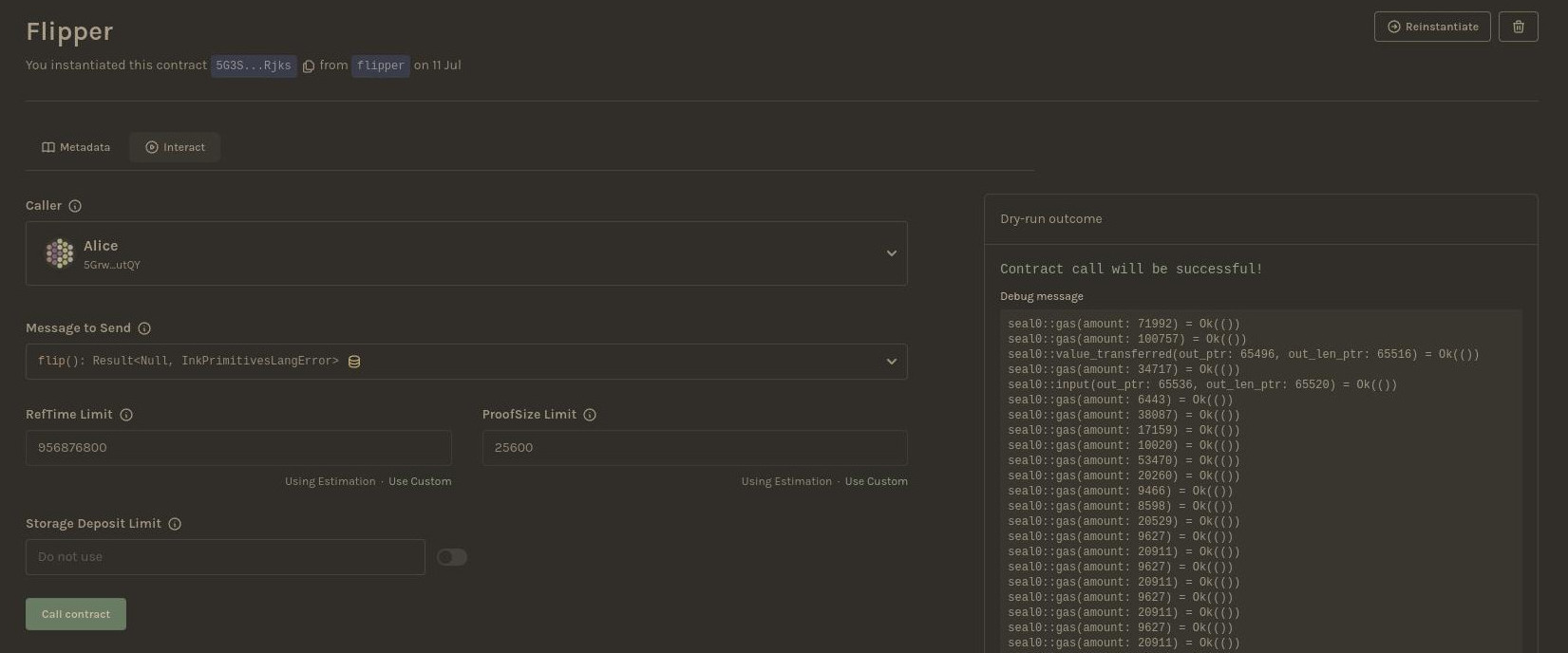

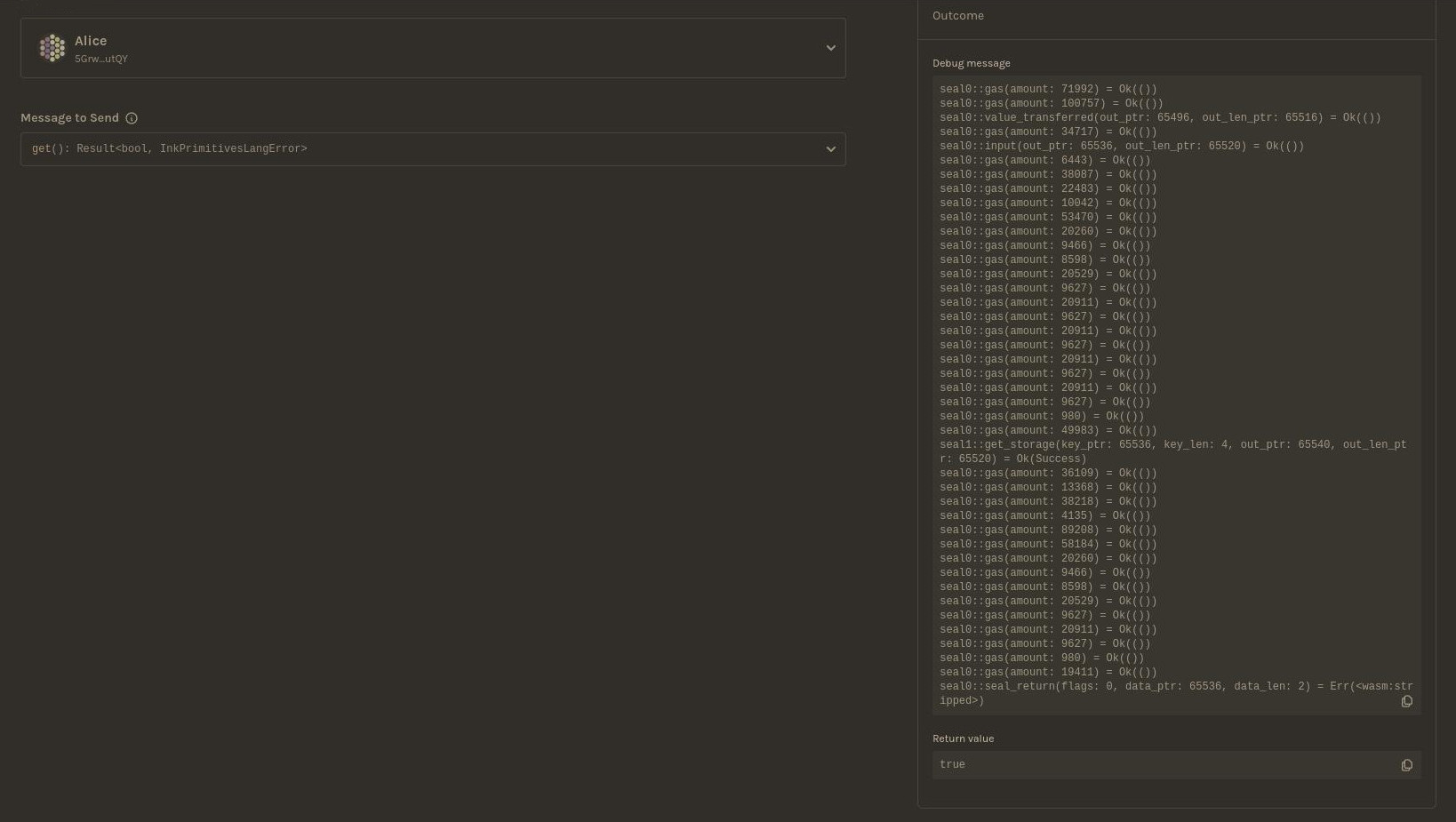

- Write a smart contract using one of a number of languages and deploy it to a blockchain

- Implement a Substrate based blockchain

- Deploy a parachain utilizing Substrate, Cumulus, and Polkadot

- Employ FRAME to accelerate blockchain and parachain development

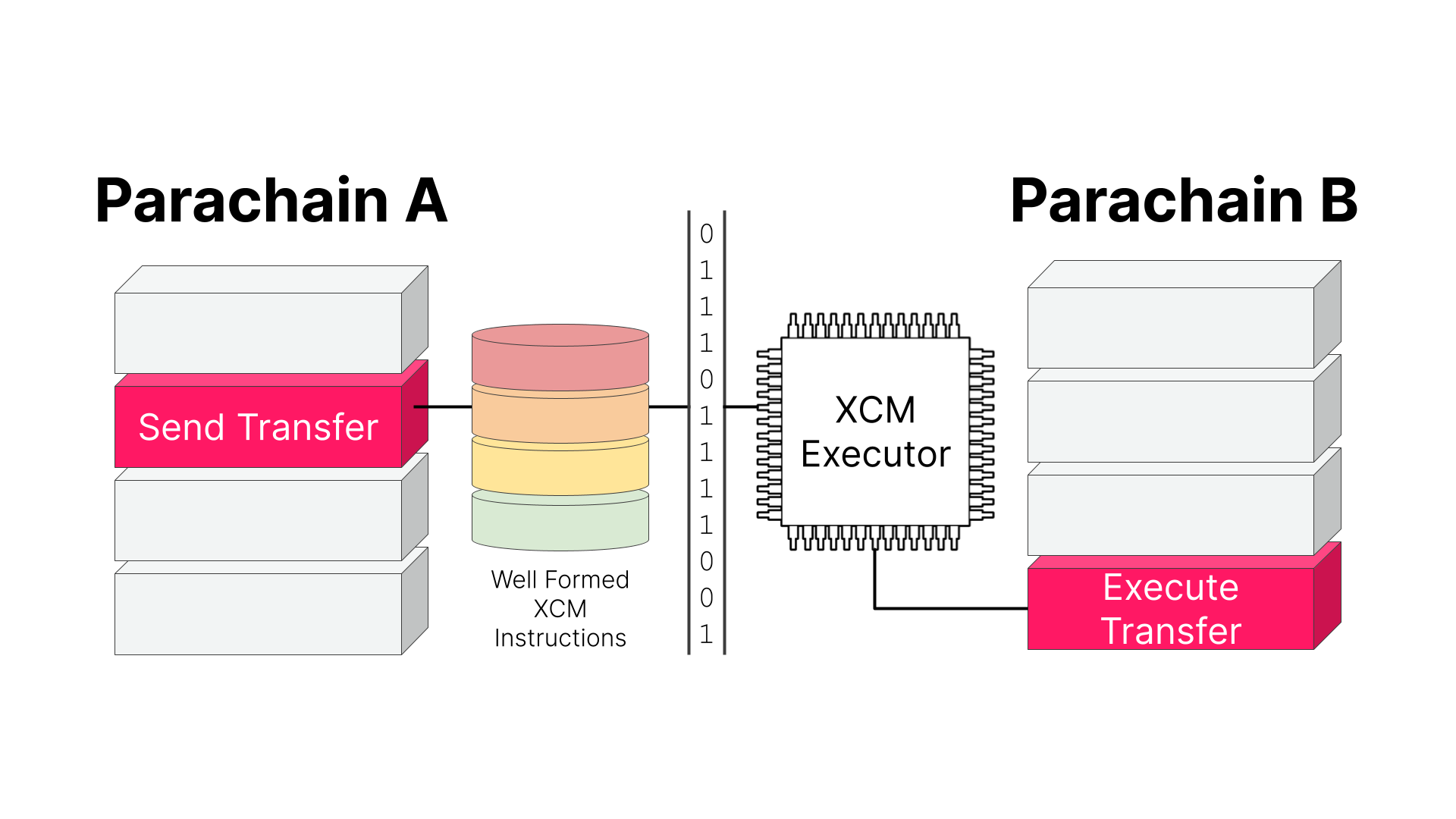

- Configure XCM for cross-consensus messaging between parachains

🖋️ Nomenclature

The academy uses explicit terms to describe materials use within as content categories defined here:

- Lesson: a segment of content (1-2 hours) that is one of:

- Lecture: An oral presentation that consists primarily of slide based content.

Most content in this book is of this type.

- Exercise: a short (5-10 minutes) exercise for to be completed during a lecture (code snippets, mini-demos, etc.).

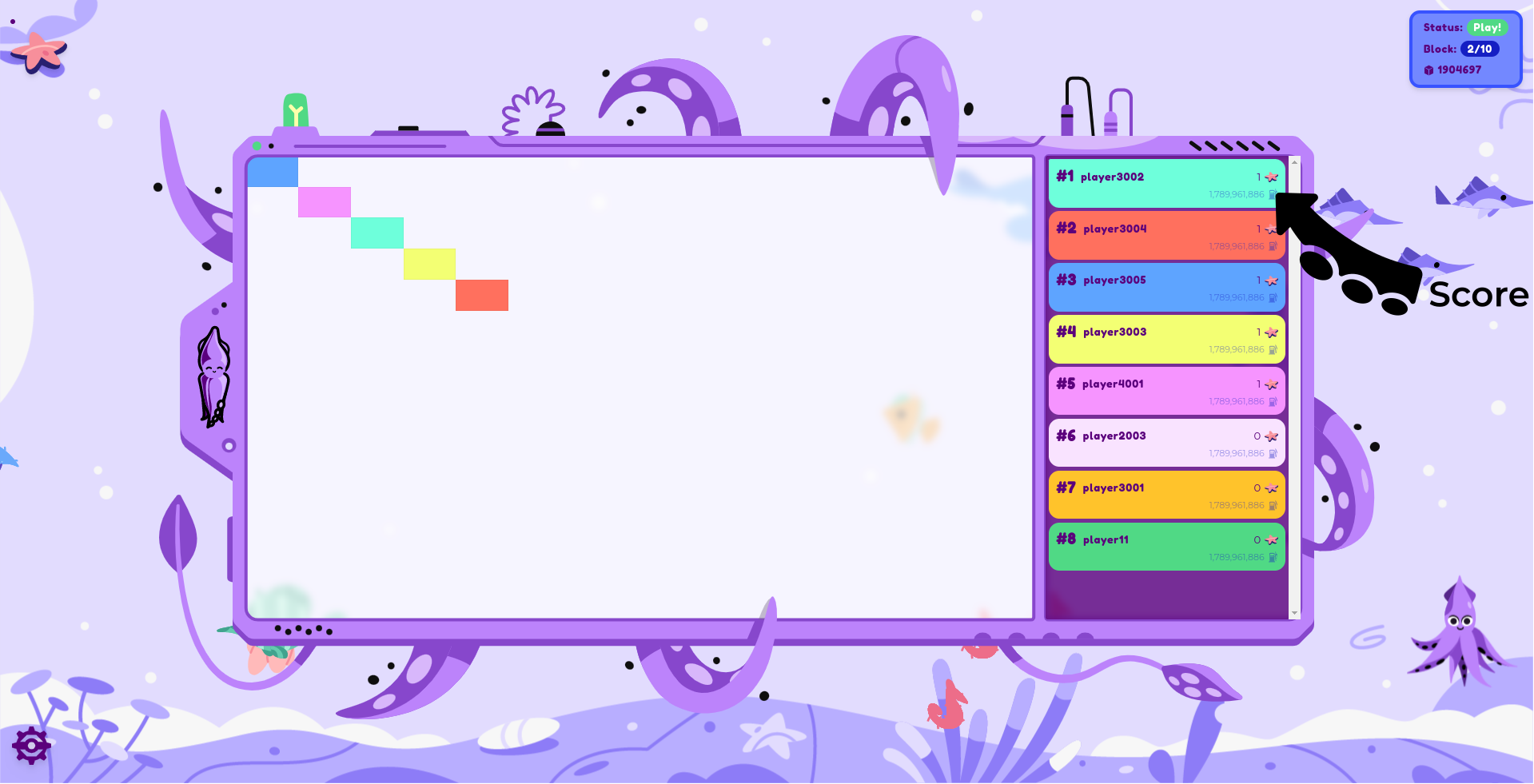

- Workshop: these are step-by-step, longer (0.5-3 hours) guided in-class material (live-coding, competitions, games, etc.). Workshops are instructor lead, and hand-held to get everyone to the same result.

- Activity: these are self-directed activities for individuals and/or small groups. Activities are not guided or "hand-held" by the instructor like workshops are.

- Lecture: An oral presentation that consists primarily of slide based content.

Most content in this book is of this type.

- Assignment: a graded piece of work, typically one per week is assigned.

- Assignments are not public - these are only accessible by Academy Faculty, Staff, and (in a derivative form) Students.

🪜 Course Sequence

The course is segmented into modules, with the granular lessons intended to be completed in the sequence provided in the left-side navigation bar.

| Module | Topic |

|---|---|

| 🔐 Cryptography | Applied cryptography concepts and introduction to many common tools of the trade for web3 builders. |

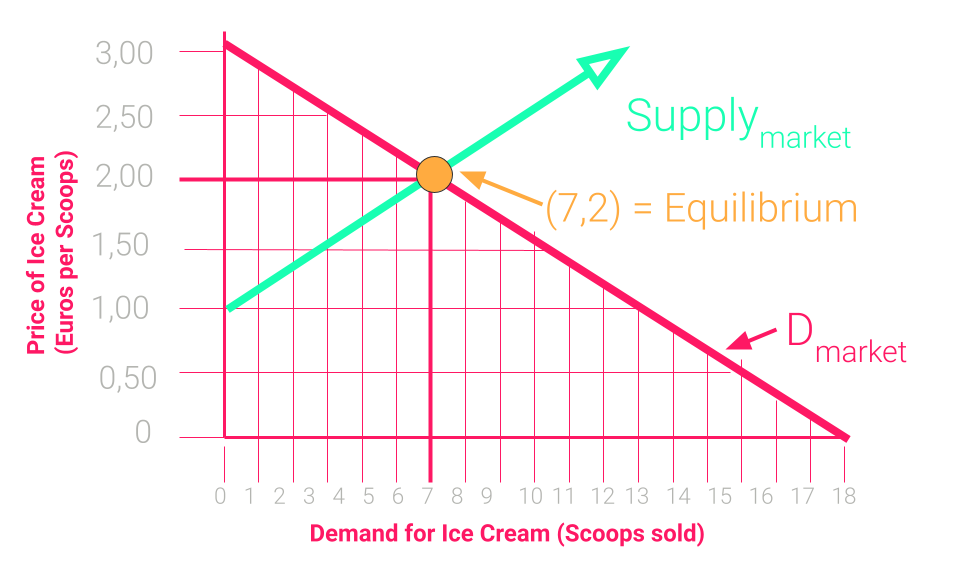

| 🪙 Economics and Game Theory | Applied economics and game theory fundamental to the architecture and operation of web3 applications. |

| ⛓️ Blockchains and Smart Contracts | Blockchain and applications built on them covered in depth conceptually and hands-on operation and construction. |

| 🧬 Substrate | The blockchain framework canonical to Polkadot and Parachains covered in depth, at a lower level. |

| 🧱 FRAME | The primary Substrate runtime framework used for parachain development. |

| 🟣 Polkadot | The Polkadot blockchain covered in depth, focus on high-level design and practically how to utilize its blockspace. |

| 💱 XCM | The cross consensus messaging format covered from first principals to use in protocols. |

The lessons include materials used, with links and instructions to required external materials as needed.1

Notably, the graded assignments for the Academy and some solutions to public activities and exercises remain closed source, and links are intentionally left out of this book. These materials may be shared as needed with students in person during the Academy.

🔐 Cryptography

“Cryptography rearranges power: it configures who can do what, from what”

Phillip Rogaway, The Moral Character of Cryptographic Work

Applied cryptography concepts and introduction to many common tools of the trade for web3 builders.

Introduction to Cryptography

How to use the slides - Full screen (new tab)

Introduction to Cryptography

Some Useful Equations

Notes:

Just kidding!

Goals for this lesson

- Understand the goals of cryptography

- Understand some network and contextual assumptions

- Learn what expectations cryptography upholds

- Learn the primitives

Notes:

In this first lesson,

Cryptography Landscape

Notes:

What is covered in this course is all connected subjects. We will not cover any details for hybrid or interactive protocols in the course.

Operating Context

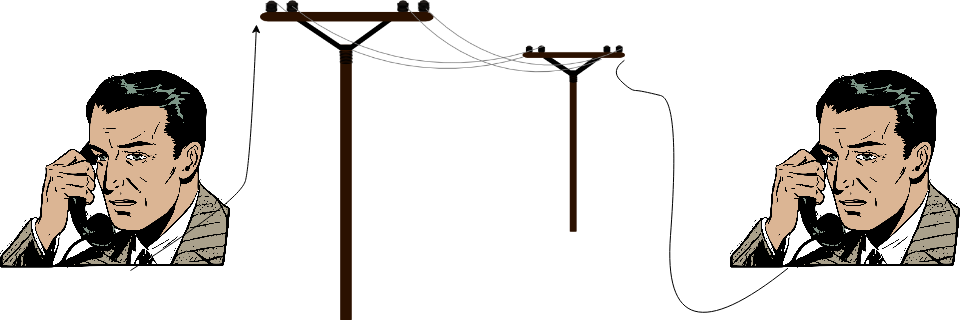

The internet is a public space.

We communicate over public channels. Adversaries may want to:

- Read messages not intended for them

- Impersonate others

- Tamper with messages

Notes:

Use e-mail as an example of an flawed system.

Some examples include:

- An attacker may impersonate your boss, trying to get you to send them money

- An attacker may change a message sent over a network, e.g. an instruction to transfer 100 EUR to 10000 EUR

Probably best for the teacher to ask students to participate with examples of application messages, not just person-to-person messages.

Operating Context

Resources are constrained.

- Network, storage, computation, etc.: We don't want to send, store, or operate on the same data, but we want guarantees about it, e.g. that we agree on a message's contents.

- Privacy: We must assume that all channels can be monitored, and thus closed channels are heavily constrained (i.e. assumed to not exist).

Open vs. Closed Channels

Cryptography based on public systems is more sound.

Kerckhoff's Principle: Security should not rely on secret methods,

but rather on secret information.

Notes:

There is no such thing as a "closed channel" :)

- Methods can be reverse engineered. After that, the communication channel is completely insecure. For example, CSS protection for DVDs.

- We always work with public, open protocols.

Cryptographic Guarantees*

- Data confidentiality

- Data authenticity

- Data integrity

- Non-repudiation

Notes:

Cryptography is one of the (most important) tools we have to build tools that are guaranteed to work correctly. This is regardless of who (human, machine, or otherwise) is using them and their intentions (good or bad).

Why an asterisk? There generally are no perfect & absolute guarantees here, but for most practical purposes the bounds on where these fail are good enough to serve our needs as engineers and users. Do note the assumptions and monitor their validity over time (like quantum tech).

Important Non-Guarantee

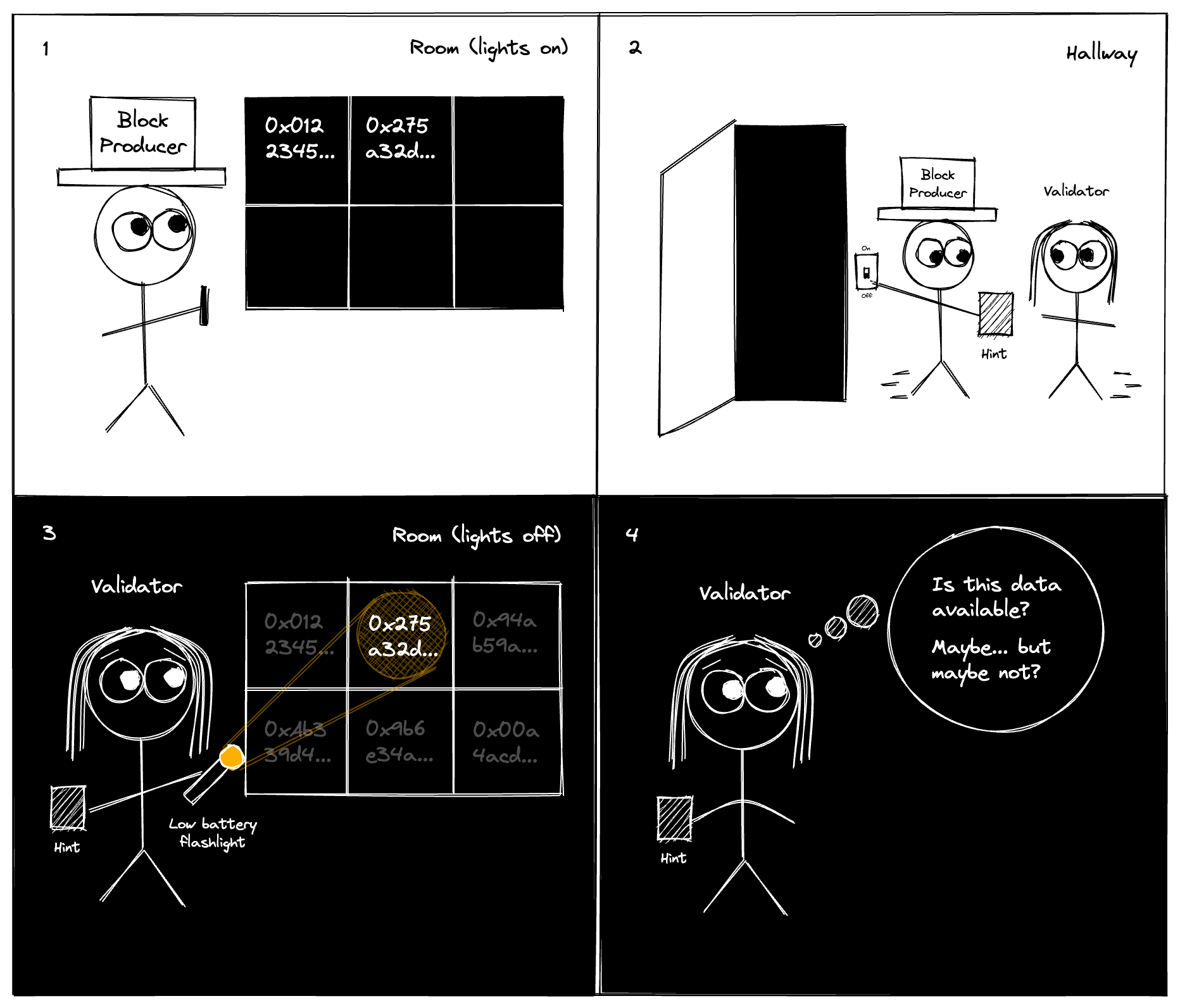

- Data availability

Cryptography alone cannot make strong guarantees that data is available to people when they want to access it.

Notes:

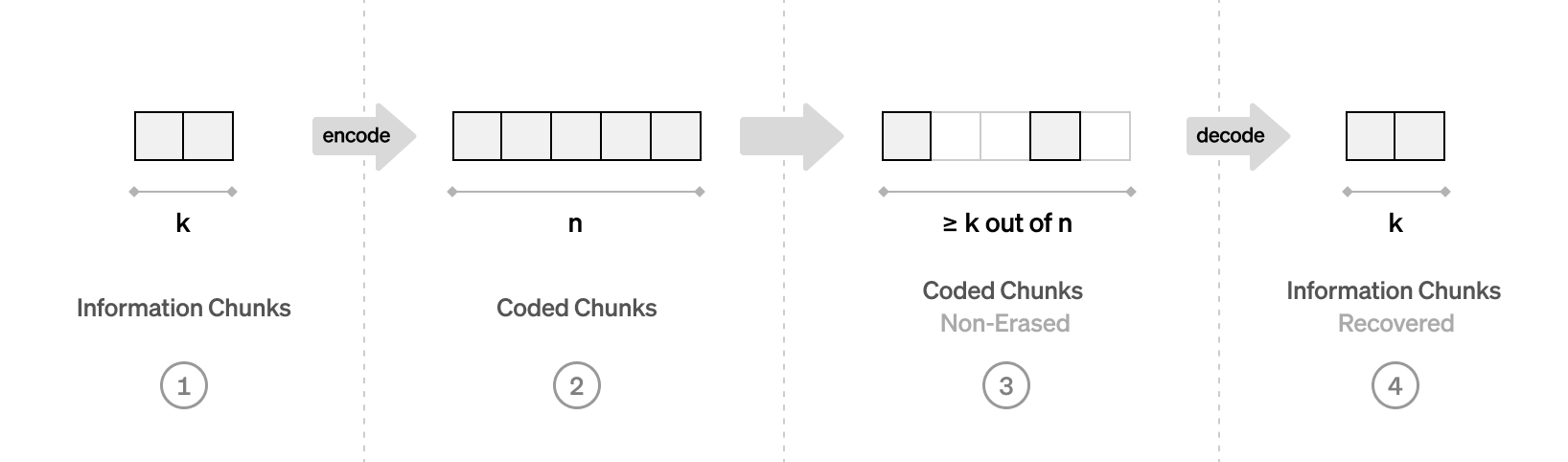

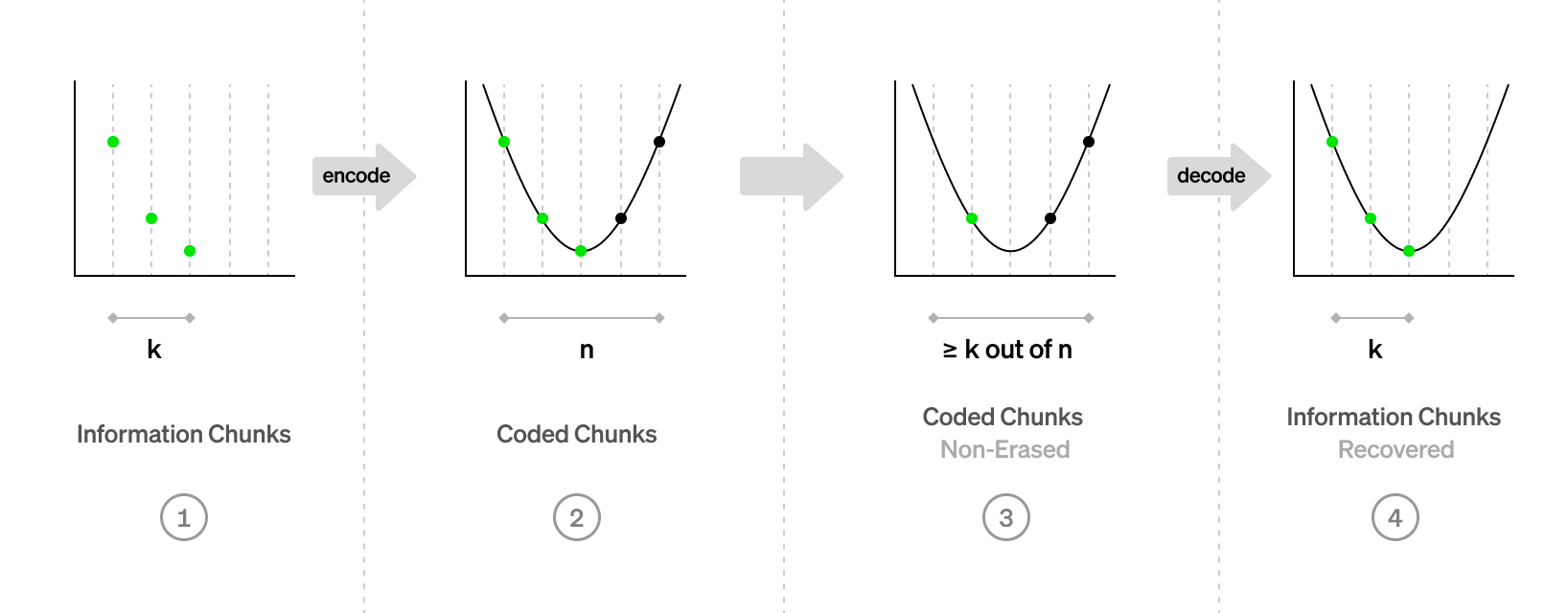

There are many schemes to get around this, and this topic will come up later in the course. We will touch on erasure coding, which makes data availability more efficient.

Data Confidentiality

A party may gain access to information

if and only if they know some secret (a key).

Confidentiality ensures that a third party cannot read my confidential data.

Notes:

The ability to decrypt some data and reveal its underlying information directly implies knowledge of some secret, potentially unknown to the originator of the information. Supplying the original information (aka plain text message) can be used in a "challenge game" mechanism as one means of proving knowledge of the secret without compromising it.

Mention use of the term "plaintext".

Allegory: A private document stored on server where sysadmin has access can be subpoenaed, violating assumed Attorney-Client Privilege on the document.

---v

Confidentiality in Communication Channels

Suppose Alice and Bob are sending confidential messages back and forth. There are some subtypes of confidentiality here:

- Forward Secrecy: Even if an adversary temporarily learns Alice's secret, it cannot read future messages after some point.

- Backwards Secrecy: Even if an adversary temporarily learns Alice's secret, it cannot read past messages beyond some previous point.

Data Authenticity

Users can have the credible expectation that the stated origin of a message is authentic.

Authenticity ensures that a third party cannot pretend I created some data.

Notes:

- Digital signatures should be difficult (practically speaking: impossible) to forge.

- Digital signatures should verify that the signer knows some secret, without revealing the secret itself.

Data Integrity

If data is tampered with, it is detectable. In other words, it possible to check if the current state of some data is the consistent with when it was created.

Integrity ensures that if data I create is corrupted, it can be detected.

---v

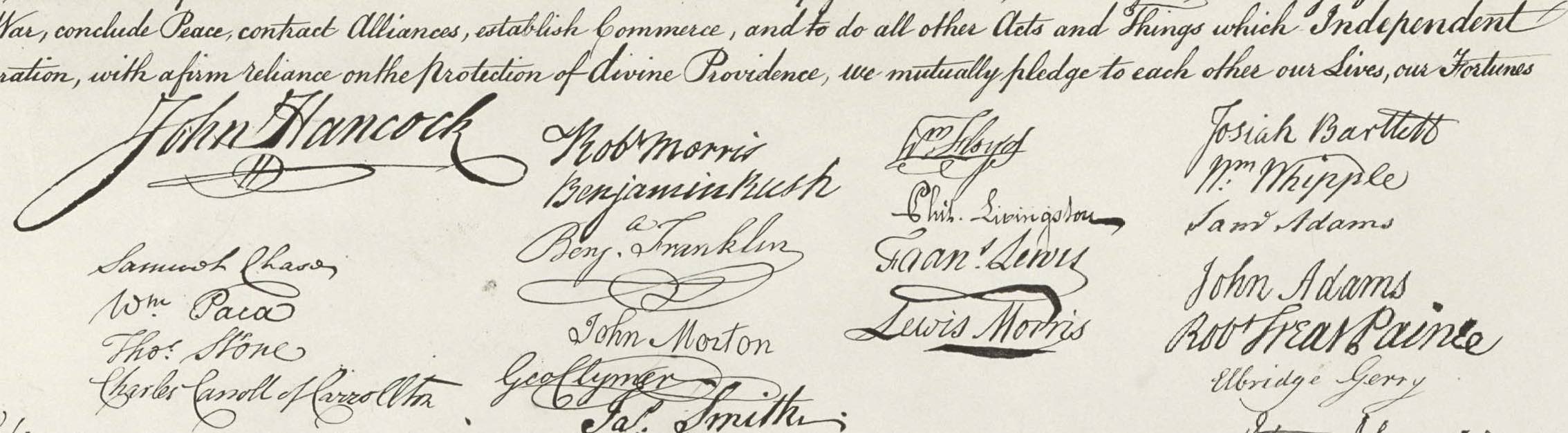

Physical Signatures

Physical signatures provide weak authenticity guarantees

(i.e. they are quite easy to forge), and no integrity guarantees.

---v

An Ideal Signature

Notes:

For example, if you change the year on your university diploma, the dean's signature is still valid. Digital signatures provide a guarantee that the signed information has not been tampered with.

Non-repudiation

The sender of a message cannot deny that they sent it.

Non-repudiation ensures if Bob sends me some data, I can prove to a third party that they sent it.

One-Way Functions

One-way functions form the basis of both

(cryptographic) hashing and asymmetric cryptography. A function $f$ is one way if:

- it is reasonably fast to compute

- it is very, very slow to undo

Notes:

There are a lot of assumptions about why these functions are hard to invert, but we cannot rigorously prove it. We often express inversion problems in terms of mathematical games or oracles.

Hash Functions

Motivation: We often want a succinct, yet unique representation of some (potentially large) data.

A fingerprint, which is much smaller than a person, yet uniquely identifies an individual.

Notes:

The following slides serve as an intro. Many terms may be glossed over, and covered in detail later. There are lessons later in this module dedicated to hashes and hash-based data structures.

---v

Hash Function Applications

Hashes can be useful for many applications:

- Representation of larger data object

(history, commitment, file) - Keys in a database

- Digital signatures

- Key derivation

- Pseudorandom functions

Symmetric Cryptography

Symmetric encryption assumes all parties begin with some shared secret information, a potentially very difficult requirement.

The shared secret can then be used to protect further communications from others who do not know this secret.

In essence, it gives a way of extending a shared secret over time.

Notes:

Remember that these communications are over an open channel, as we assumed that all channels can be monitored.

Symmetric Encryption

For example, the Enigma cipher in WW2. A channel was initiated by sharing a secret ("key") between two participants. Using the cipher, those participants could then exchange information securely.

However, since the key contained only limited entropy ("information"), enough usage of it eventually compromised the secret and allowed the allies to decode messages. Even altering it once per day was not enough.

Notes:

When communicating over a channel that is protected with only a certain amount of entropy, it is still possible to extend messages basically indefinitely by introducing new entropy that is used to protect the channel sufficiently often.

Asymmetric Cryptography

-

In asymmetric cryptography, we devise a means to transform one value (the "secret") into some corresponding counterpart (the "public" key), preserving certain properties.

-

We believe that this is a one-way function (that there is no easy/fast inverse of this function).

-

Aside from preserving certain properties, we believe this counterpart (the "public key") reveals no information about the secret.

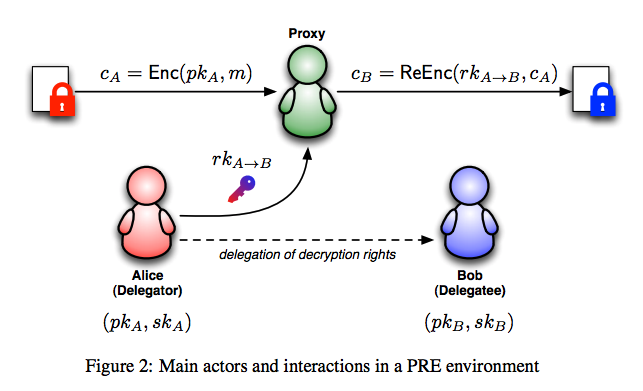

Asymmetric Encryption

Using only the public key, information can be transformed ("encrypted") such that only those with knowledge of the secret are able to inverse and regain the original information.

Digital Signatures

-

Using the secret key, information can be transformed ("signed") such that anyone with knowledge of the information and the counterpart public key is able to affirm the operation.

-

Digital signatures provide message authenticity and integrity guarantees.

-

There are two lessons are dedicated to digital signatures,

this is strictly an intro.

Digital Signatures

Signing function: a function which operates on some

message data and some secret to yield a signature.

A signature proves that the signer had knowledge of the secret,

without revealing the secret itself.

The signature cannot be used to create other signatures, and is unique to the message.

Notes:

A signing function is a pure function which operates on some message data (which may or may not be small, depending on the function) and some secret (a small piece of information known only to the operator). The result of this function is a small piece of data called a signature.

Pure means that it has no side effects.

It has a special property: it proves (beyond reasonable doubt) that the signer (i.e. operator of the signing function) had knowledge of the secret and utilized this knowledge with the specific message data, yet it does not reveal the secret itself, nor can knowledge of the signature be used to create other signatures (e.g. for alternative message data).

Non-repudiation for Crypgraphic Signatures

There is cryptographic proof that the secret was known to the producer of the signature.

The signer cannot claim that the signature was forged, unless they can defend a claim that the secret was compromised prior to signing.

Practical Considerations

Symmetric cryptography is much faster, but requires more setup (key establishment) and trust (someone else knows the secret).

Asymmetric cryptography is slow, but typically preserves specific algebraic relationships, which then permit more diverse if fragile protocols.

Hybrid Cryptography

Hybrid cryptography composes new mechanisms from different cryptographic primitives.

For example:

- Symmetric encryption can provide speed, and often confidentiality,

- Hash functions can reduce the size of data while preserving identity,

- Asymmetric cryptography can dictate relations among the participants.

Certifications

Certifications are used to make attestations about public key relationships.

Typically in the form of a signature on:

- One or more cryptographically strong identifiers (e.g. public keys, hashes).

- Information about its ownership, its use and any other properties that the signer is capable of attesting/authorizing/witnessing.

- (Meta-)information about this information itself, such as how long it is valid for and external considerations which would invalidate it.

Notes:

- Real application is the hierarchy of SSL certs.

- Root keys -> State level entities -> Smaller entities.

- Web of Trust & GPG cross-signing

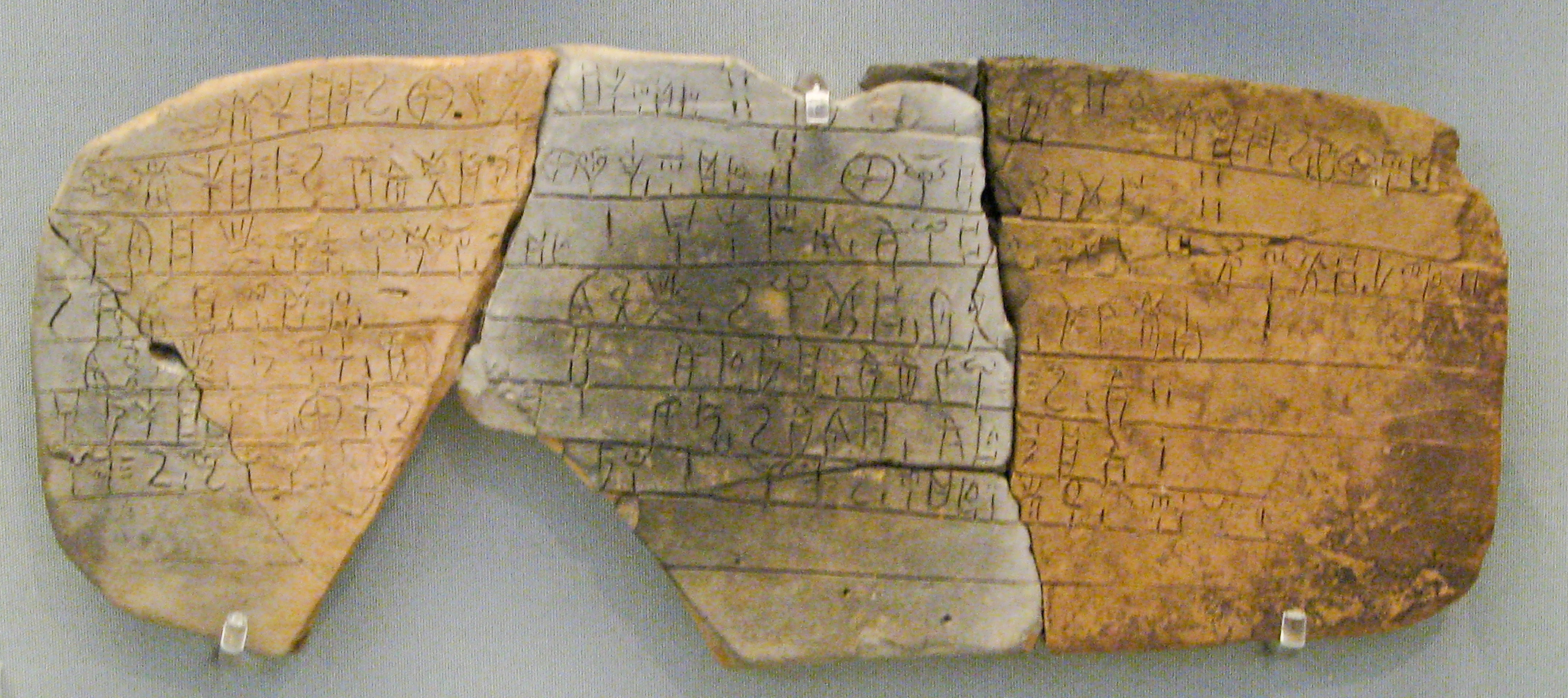

- In the case of signature-based certificates, as long as you have the signature, data, and originating public key, you can trust a certificate no matter where it came from. It could be posted on a public message board, sent to you privately, or etched into stone.

Entropy, Randomness, and Key Size

- Entropy: Amount of non-redundant information contained within some data.

- Randomness: Unpredictability of some information. Less random implies lower entropy.

- Key size: Upper limit of possible entropy contained in a key. Keys with less random (more predictable) data have less entropy than this upper bound.

- One-time pad: A key of effectively infinite size. If it is perfectly random (i.e. has maximal entropy), then the cipher is theoretically unbreakable.

Notes:

Mention the upcoming "many time pad" activity, that exploits using a one time pad multiple times.

Randomness Generation

#![allow(unused)] fn main() { fn roll_die() -> u32 { // Guaranteed random: it was achieved through a real-life die-roll. 4u32 } }

- Pseudo-random sequences

- Physical data collection (e.g. cursor movement, LSB of microphone)

- Specialised hardware (e.g. low-level noise on silicon gates, quantum-amplifiers)

Notes:

LSB := Least Significant Bit

Summary

Cryptography is much more than encryption.

- Communicate on public networks, in the open

- Access information

- Have expectations about a message's authenticity and integrity

- Prove knowledge of some secret information

- Represent large amounts of data succinctly

Questions

What insights did you gain?

Notes:

Addresses and Keys

How to use the slides - Full screen (new tab)

Addresses and Keys

Outline

- Binary Formats

- Seed Creation

- Hierarchical Deterministic Key Derivation

Binary Display Formats

When representing binary data, there are a few different display formats you should be familiar with.

Hex: 0-9, a-f

Base64: A-Z, a-z, 0-9, +, /

Base58: Base64 without 0/O, I/l, +, and /

Notes:

Be very clear that this is a display format that we use to transmit binary data through text. The same data can be encoded with any of these formats, it's just important to know which one you're using to decode. Data is not typically stored in these formats unless it has to be transmitted through text.

Binary Display Formats Example

Every hex character is 4 bits. Every base64 character is 6 bits. base58 characters are usually about 6 bits.

binary: 10011111 00001010 10011110 10011000 01001100 11010011 10110010 00000101

hex: 9 f 0 a 9 e 9 8 4 c d 3 b 2 0 5

base64: n w q e m E z T s g U=

base58: T b u H z e 3 c t k c

hex: 9f0a9e984cd3b205

base64: nwqemEzTsgU=

base58: TbuHze3ctkc

Notes:

It turns out that converting from hex/base64 to base58 can in theory take n^2 time!

Mnemonics and Seed Creation

Notes:

These are all different representation of a secret. Fundamentally doesn't really change anything.

Seeds are secrets

Recall, both symmetric and asymmetric cryptography require a secret.

Mnemonics

Many wallets use a dictionary of words and give people phrases,

often 12 or 24 words, as these are easier to back up/recover than byte arrays.

Notes:

High entropy needed. People are bad at being random. Some people create their own phrases... this is usually stupid.

Dictionaries

There are some standard dictionaries to define which words (and character sets) are included in the generation of a phrase. Substrate uses the dictionary from BIP39.

| No. | word |

|---|---|

| 1 | abandon |

| 2 | ability |

| 3 | able |

| 4 | about |

| 5 | above |

The first 5 words of the BIP39 English dictionary

Mnemonic to Secret Key

Of course, the secret key is a point on an elliptic curve, not a phrase.

BIP39 applies 2,048 rounds of the SHA-512 hash function

to the mnemonic to derive a 64 byte key.

Substrate uses the entropy byte array from the mnemonic.

Portability

Different key derivation functions affect the ability to use the same mnemonic in multiple wallets as different wallets may use different functions to derive the secret from the mnemonic.

Cryptography Types

Generally, you will encounter 3 different modern types of cryptography across most systems you use.

- Ed25519

- Sr25519

- ECDSA

We will go more in depth in future lectures!

Notes:

You may have learned RSA in school. It is outdated now, and requires huge keys.

What is an address?

An address is a representation of a public key, potentially with additional contextual information.

Notes:

Having an address for a symmetric cryptography doesn't actually make any sense, because there is no public information about a symmetric key.

Address Formats

Addresses often include a checksum so that a typo cannot change one valid address to another.

Valid address: 5GEkFD1WxzmfasT7yMUERDprkEueFEDrSojE3ajwxXvfYYaF

Invalid address: 5GEkFD1WxzmfasT7yMUERDprk3ueFEDrSojE3ajwxXvfYYaF

^

E changed to 3

Notes:

It hasn't been covered yet, but some addresses even go extra fancy and include an error correcting code in the address.

SS58 Address Format

SS58 is the format used in Substrate.

It is base58 encoded, and includes a checksum and some context information. Almost always, it is 2 bytes of context and 2 bytes of checksum.

base58Encode( context | public key | checksum )

Notes:

| here stands for concatenation.

For ECDSA, the public key is 33 bytes, so we use the hash of it in place of the public key.

There are a lot more variants here, but this is by far the most common one.

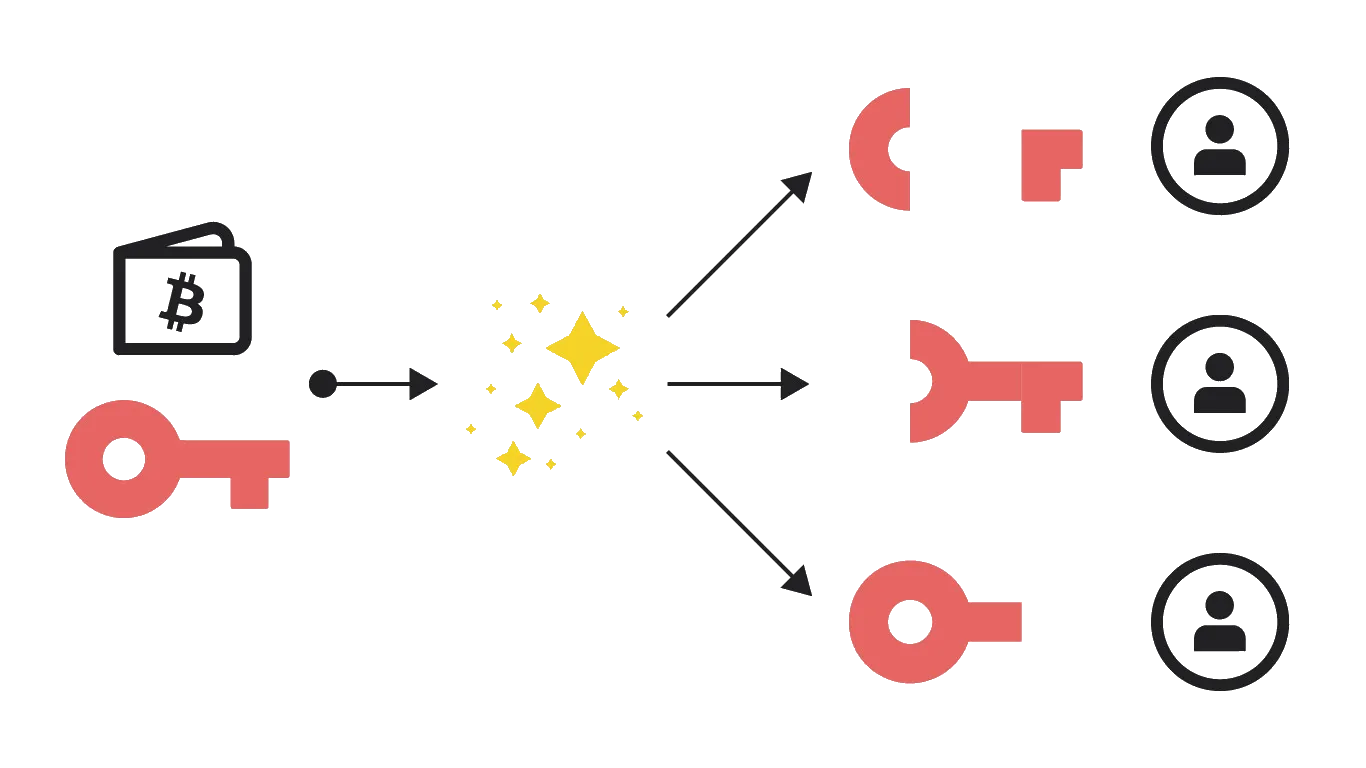

HDKD

Hierarchical Deterministic Key Derivation

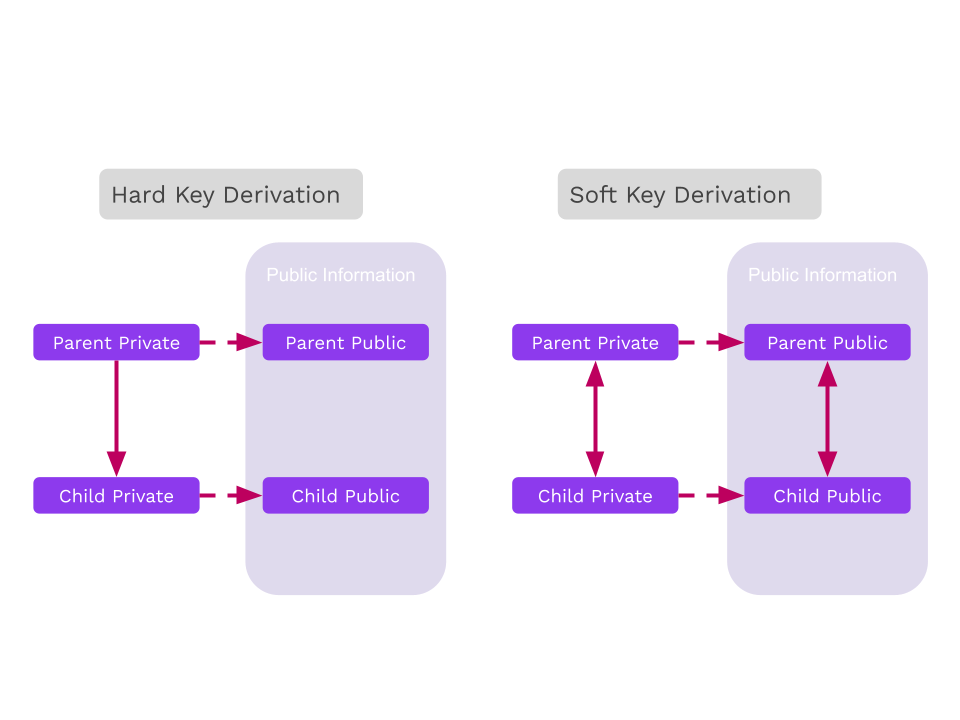

Hard vs. Soft

Key derivation allows one to derive (virtually limitless)

child keys from one "parent".

Derivations can either be "hard" or "soft".

Hard vs. Soft

Hard Derivation

Hard derivation requires the secret key and derives new child secret keys.

Typical "operational security" usages should favor hard derivation over soft derivation because hard derivations avoid leaking the sibling keys, unless the original secret is compromised.

Always do hard paths first, then conclude in soft paths.

Hard Derivation in Wallets

Wallets can derive keys for use in different consensus systems while only needing to back up one secret plus a pattern for child derivation.

Hard Derivation in Wallets

Let's imagine we want to use this key on multiple networks, but we don't want the public keys to be connected to each other.

Subkey Demo

Hard Derivation

Notes:

Hard keys: Take a path (data like a name/index), concatenate with the original key, and hash it for a new key. They reveal nothing about keys above them, and only with the path between it and children could they be recovered.

Soft Derivation

Soft derivation allows one to create derived addresses from only the public key. Contrary to hard derivation, all keys are related.

Notes:

- With any key and the paths to children and. or parents, the public and private keys can be recovered.

- Soft derivations can break some niche advanced protocols, but our sr25519 crate avoids supporting protocols that conflict with soft derivations.

Soft Derivation

- Note that these generate new addresses, but use the same secret seed.

- We can also use the same paths, but only using the Account ID from

//polkadot. It generates the same addresses!

Soft Derivation in Wallets

Wallets can use soft derivation to link all payments controlled by a single private key, without the need to expose the private key for the address derivation.

Use case: A business wants to generate a new address for each payment, but should be able to automatically give customers an address without the secret key owner deriving a new child.

Notes:

On the use case, taking each payment at a different address could help make the association between payment and customer.

See: https://wiki.polkadot.network/docs/learn-accounts#soft-vs-hard-derivation

Subkey Demo

Soft Derivation

Notes:

See the Jupyter notebook and/or HackMD cheat sheet for this lesson.

Mention that these derivations create entirely new secret seeds.

Questions

Subkey Signature and HDKD (Hierarchical Deterministic Key Derivation) Demo

Here are subkey examples for reference on use. Compliments the formal documentation found here.

Key Generation

subkey generate

Secret phrase: desert piano add owner tuition tail melt rally height faint thunder immune

Network ID: substrate

Secret seed: 0x6a0ea68072cfd0ffbabb40801570fa5e9f3a88966eaed9dedaeb0cf140b9cd8d

Public key (hex): 0x7acdc47530002fbc50f413859093b7df90c27874aee732dca940ea4842751d58

Account ID: 0x7acdc47530002fbc50f413859093b7df90c27874aee732dca940ea4842751d58

Public key (SS58): 5Eqipnpt5asTm7sCFWQeJjsNJX5cYVJMid3zjKHjDUGKBJTo

SS58 Address: 5Eqipnpt5asTm7sCFWQeJjsNJX5cYVJMid3zjKHjDUGKBJTo

Sign

echo -n 'Hello Polkadot Blockchain Academy' | subkey sign --suri 'desert piano add owner tuition tail melt rally height faint thunder immune'

Note, this changes each execution, this is one viable signature:

f261d56b80e4b53c70dd2ba1de6b9384d85a8f4c6d912fd86acab3439a47992aa85ded04ac55c7525082dcbc815001cd5cc94ec1a907bbd8e3138cfc8a382683

Verify

echo -n 'Hello Polkadot Blockchain Academy' | subkey verify '0xf261d56b80e4b53c70dd2ba1de6b9384d85a8f4c6d912fd86acab3439a47992aa85ded04ac55c7525082dcbc815001cd5cc94ec1a907bbd8e3138cfc8a382683' \

'0x7acdc47530002fbc50f413859093b7df90c27874aee732dca940ea4842751d58'

Expect

Signature verifies correctly.

Tamper with the Message

Last char in

Public key (hex)- AKA URI - is changed:

echo -n 'Hello Polkadot Blockchain Academy' | subkey verify \

'0xf261d56b80e4b53c70dd2ba1de6b9384d85a8f4c6d912fd86acab3439a47992aa85ded04ac55c7525082dcbc815001cd5cc94ec1a907bbd8e3138cfc8a382683' \

'0x7acdc47530002fbc50f413859093b7df90c27874aee732dca940ea4842751d59'

Error: SignatureInvalid

Hard Derivation

subkey inspect 'desert piano add owner tuition tail melt rally height faint thunder immune//polkadot' --network polkadot

Secret Key URI `desert piano add owner tuition tail melt rally height faint thunder immune//polkadot` is account:

Network ID: polkadot

Secret seed: 0x3d764056127d0c1b4934725cb9faecf00ed0996daa84d24a903b906f319e06bf

Public key (hex): 0xce6ccb0af417ade10062ac9b553d506b67d16c61cd2b6ce85330bc023db7e906

Account ID: 0xce6ccb0af417ade10062ac9b553d506b67d16c61cd2b6ce85330bc023db7e906

Public key (SS58): 15ffBb8rhETizk36yaevSKM2MCnHyuQ8Dn3HfwQFtLMhy9io

SS58 Address: 15ffBb8rhETizk36yaevSKM2MCnHyuQ8Dn3HfwQFtLMhy9io

subkey inspect 'desert piano add owner tuition tail melt rally height faint thunder immune//kusama' --network kusama

Secret Key URI `desert piano add owner tuition tail melt rally height faint thunder immune//kusama` is account:

Network ID: kusama

Secret seed: 0xabd92064a63df86174acfd29ab3204897974f0a39f5d61efdd30099aa5f90bd9

Public key (hex): 0xf62e5d444f89e704bb9b412adc472f990e9a9f40725ac6ff3abee1c9b7625a63

Account ID: 0xf62e5d444f89e704bb9b412adc472f990e9a9f40725ac6ff3abee1c9b7625a63

Public key (SS58): J9753RnTdZJct5RmFQ6gFVdKSyrEjzYwvYUBufMX33PB7az

SS58 Address: J9753RnTdZJct5RmFQ6gFVdKSyrEjzYwvYUBufMX33PB7az

Soft Derivation from Secret

subkey inspect 'desert piano add owner tuition tail melt rally height faint thunder immune//polkadot/0' --network polkadot

Secret Key URI `desert piano add owner tuition tail melt rally height faint thunder immune//polkadot/0` is account:

Network ID: polkadot

Secret seed: n/a

Public key (hex): 0x4e8dfdd8a386ae37b8731dba5480d5cc65739023ea24f1a09d88be1bd9dff86b

Account ID: 0x4e8dfdd8a386ae37b8731dba5480d5cc65739023ea24f1a09d88be1bd9dff86b

Public key (SS58): 12mzv68gS8Zu2iEdt4Ktkt48JZSKyFSkAVjvtgYhoa42NLNa

SS58 Address: 12mzv68gS8Zu2iEdt4Ktkt48JZSKyFSkAVjvtgYhoa42NLNa

subkey inspect 'desert piano add owner tuition tail melt rally height faint thunder immune//polkadot/1' --network polkadot

Secret Key URI `desert piano add owner tuition tail melt rally height faint thunder immune//polkadot/1` is account:

Network ID: polkadot

Secret seed: n/a

Public key (hex): 0x2e8b3090b17b12ea63029f03d852af71570e8e526690cc271491318a45785e33

Account ID: 0x2e8b3090b17b12ea63029f03d852af71570e8e526690cc271491318a45785e33

Public key (SS58): 1242YwUZGBQ84btGSGdSX4swf1ibfSaCDR1sr1ejC9KQ1NbJ

SS58 Address: 1242YwUZGBQ84btGSGdSX4swf1ibfSaCDR1sr1ejC9KQ1NbJ

Soft Derivation from Public

Note: We use addresses here because Subkey does not derive paths from a raw public key (AFAIK).

subkey inspect 12mzv68gS8Zu2iEdt4Ktkt48JZSKyFSkAVjvtgYhoa42NLNa/0

Public Key URI `12mzv68gS8Zu2iEdt4Ktkt48JZSKyFSkAVjvtgYhoa42NLNa/0` is account:

Network ID/Version: polkadot

Public key (hex): 0x40f22875159420aca51178d1baf2912c18dcb83737dd7bd39dc6743da326dd1c

Account ID: 0x40f22875159420aca51178d1baf2912c18dcb83737dd7bd39dc6743da326dd1c

Public key (SS58): 12UA12xuDnEkEsEDrR4T4Cf3S1Hyi2C7B6hJW8LTkcsZy8BX

SS58 Address: 12UA12xuDnEkEsEDrR4T4Cf3S1Hyi2C7B6hJW8LTkcsZy8BX

subkey inspect 12mzv68gS8Zu2iEdt4Ktkt48JZSKyFSkAVjvtgYhoa42NLNa/1

Public Key URI `12mzv68gS8Zu2iEdt4Ktkt48JZSKyFSkAVjvtgYhoa42NLNa/1` is account:

Network ID/Version: polkadot

Public key (hex): 0xc62ec5cd7d83e1f41462d455bb47b6bad9ed5a14741a920ead8366c63746391b

Account ID: 0xc62ec5cd7d83e1f41462d455bb47b6bad9ed5a14741a920ead8366c63746391b

Public key (SS58): 15UrNnNSMpX49F3mWcCX7y4kMGcvnQxCabLMT3d8U5abpwr3

SS58 Address: 15UrNnNSMpX49F3mWcCX7y4kMGcvnQxCabLMT3d8U5abpwr3

Hash Functions

How to use the slides - Full screen (new tab)

Hash Functions

Introduction

We often want a succinct representation of some data

with the expectation that we are referring to the same data.

A "fingerprint"

Hash Function Properties

- Accept unbounded size input

- Map to a bounded output

- Be fast to compute

- Be computable strictly one-way

(difficult to find a pre-image for a hash) - Resist pre-image attacks

(attacker controls one input) - Resist collisions

(attacker controls both inputs)

Hash Function API

A hash function should:

- Accept an unbounded input size (

[u8]byte array) - Return a fixed-length output (here, a 32 byte array).

#![allow(unused)] fn main() { fn hash(s: &[u8]) -> [u8; 32]; }

Example

Short input (5 bytes):

hash('hello') =

0x1c8aff950685c2ed4bc3174f3472287b56d9517b9c948127319a09a7a36deac8

Large input (1.2 MB):

hash(Harry_Potter_series_as_string) =

0xc4d194054f03dc7155ccb080f1e6d8519d9d6a83e916960de973c93231aca8f4

Input Sensitivity

Changing even 1 bit of a hash function completely scrambles the output.

hash('hello') =

0x1c8aff950685c2ed4bc3174f3472287b56d9517b9c948127319a09a7a36deac8

hash('hellp') =

0x7bc9c272894216442e0ad9df694c50b6a0e12f6f4b3d9267904239c63a7a0807

Rust Demo

Hashing a Message

Notes:

See the Jupyter notebook and/or HackMD cheat sheet for this lesson.

- Use a longer message

- Hash it

- Verify the signature on the hash

Speed

Some hash functions are designed to be slow.

These have applications like password hashing, which would slow down brute-force attackers.

For our purposes, we generally want them to be fast.

Famous Hash Algorithms

- xxHash a.k.a TwoX (non-cryptographic)

- MD5

- SHA1

- RIPEMD-160

- SHA2-256 (aka SHA256) &c.

- SHA3

- Keccak

- Blake2

xxHash64 is about 20x faster than Blake2.

Hash Functions in Blockchains

- Bitcoin: SHA2-256 & RIPMD-160

- Ethereum: Keccak-256 (though others supported via EVM)

- Polkadot: Blake2 & xxHash (though others supported via host functions)

Notes:

Substrate also implements traits that provide 160, 256, and 512 bit outputs for each hasher.

Exercise: Write your own benchmarking script that compares the performance of these algorithms with various input sizes.

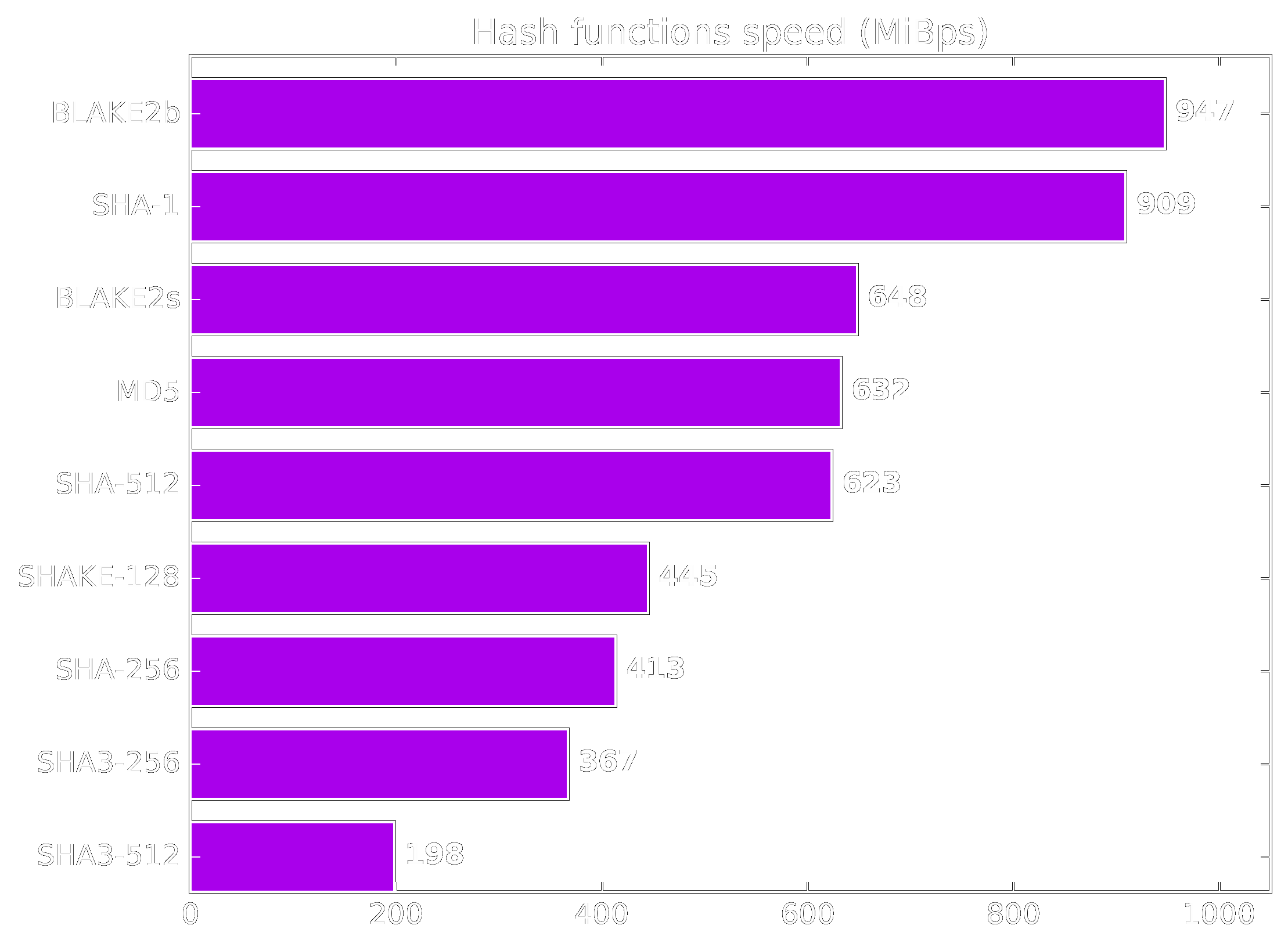

Hashing Benchmarks

Notes:

Benchmarks for the cryptographic hashing algorithms. Source: https://www.blake2.net/

XXHash - Fast hashing algorithm

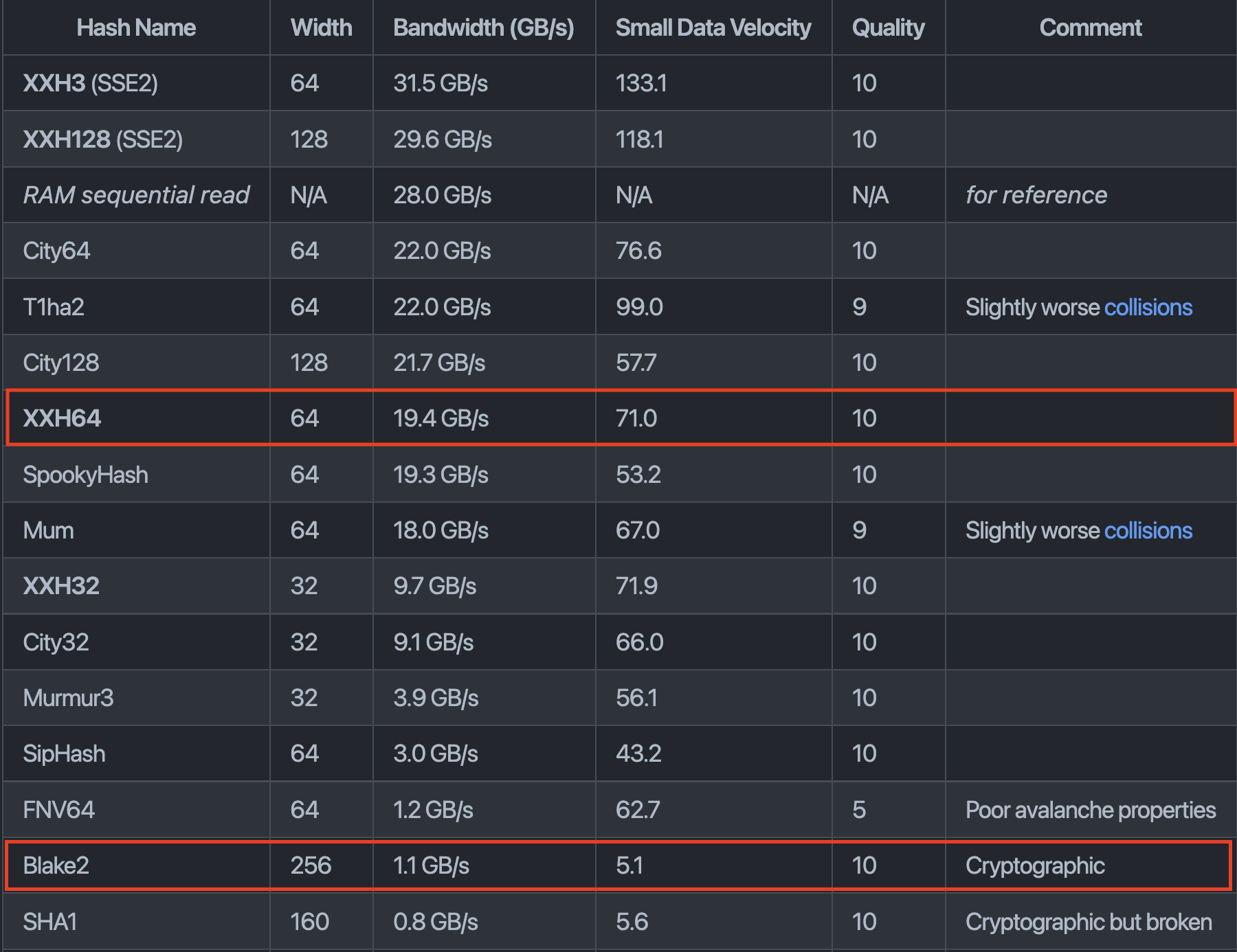

Notes:

Benchmarks for the XX-hash algorithms. Source: https://github.com/Cyan4973/xxHash#benchmarks

Non-Cryptographic Hash Functions

Non-cryptographic hash functions provide weaker

guarantees in exchange for performance.

They are OK to use when you know that the input is not malicious.

If in doubt, use a cryptographic hash function.

One Way

Given a hash, it should be difficult to find an input value (pre-image)

that would produce the given hash.

That is, given H(x), it should be difficult to find x.

Notes:

We sometimes add random bytes to pre-images to prevent guesses based on context (e.g., if you are hashing "rock, paper, scissors", then finding a pre-image is trivial without some added randomness.)

Second Pre-Image Attacks

Given a hash and a pre-image, it should be difficult to find another

pre-image that would produce the same hash.

Given H(x), it should be difficult to find any x'

such that H(x) == H(x').

Notes:

Since most signature schemes perform some internal hashing, this second pre-image would also pass signature verification.

Collision Resistance

It should be difficult for someone to find two messages that

hash to the same value.

It should be difficult to find an x and y

such that H(x) == H(y).

Collision Resistance

Difference from second pre-image attack:

In a second pre-image attack, the attacker only controls one input.

In a collision, the attacker controls both inputs.

They may attempt to trick someone into signing one message.

Notes:

Attacker has intention to impersonate the signer with the other. Generally speaking, even finding a single hash collision often results in the hash function being considered unsafe.

Birthday Problem

With 23 people, there is a 6% chance that someone will be born on a specific date, but a 50% chance that two share a birthday.

- Must compare each output with every other, not with a single one.

- Number of possible "hits" increases exponentially for more attempts, reducing the expected success to the square-root of what a specific target would be.

Birthday Attack

Thus, with a birthday attack, it is possible to find a collision of a hash function in $\sqrt {2^{n}}=2^{^{\frac{n}{2}}}$, with $\cdot 2^{^{\frac{n}{2}}}$ being the classical preimage resistance security.

So, hash function security is only half of the bit space.

Notes:

e.g., a 256 bit hash output yields 2^128 security

Partial Resistance

It should be difficult for someone to partially (for a substring of the hash output) find a collision or "second" pre-image.

- Bitcoin PoW is a partial pre-image attack.

- Prefix/suffix pre-image attack resistance reduces opportunity for UI attacks for address spoofing.

- Prefix collision resistance important to rationalize costs for some cryptographic data structures.

Hash Function Selection

When users (i.e. attackers) have control of the input, cryptographic hash functions must be used.

When input is not controllable (e.g. a system-assigned index), a non-cryptographic hash function can be used and is faster.

Notes:

Only safe when the users cannot select the pre-image, e.g. a system-assigned index.

Keccak is available for Ethereum compatibility.

Applications

Cryptographic Guarantees

Let's see which cryptographic properties apply to hashes.

---v

Confidentiality

Sending or publically posting a hash of some data $D$ keeps $D$ confidential, as only those who already knew $D$ recognize $H(D)$ as representing $D$.

Both cryptographic and non-cryptographic hashes work for this. only if the input space is large enough.

---v

Confidentiality Bad Example

Imagine playing rock, paper, scissors by posting hashes and then revealing. However, if the message is either "rock", "paper", or "scissors", the output will always be either:

hash('rock') = 0x10977e4d68108d418408bc9310b60fc6d0a750c63ccef42cfb0ead23ab73d102

hash('paper') = 0xea923ca2cdda6b54f4fb2bf6a063e5a59a6369ca4c4ae2c4ce02a147b3036a21

hash('scissors') = 0x389a2d4e358d901bfdf22245f32b4b0a401cc16a4b92155a2ee5da98273dad9a

The other player doesn't need to undo the hash function to know what you played!

Notes:

The data space has to be sufficiently large. Adding some randomness to input of the hash fixes this. Add x bits of randomness to make it x bits of security on that hash.

---v

Authenticity

Anyone can make a hash, so hashes provide no authenticity guarantees.

---v

Integrity

A hash changes if the data changes, so it does provide integrity.

---v

Non-Repudiation

Hashes on their own cannot provide authenticity, and as such cannot provide non-repudiation.

However, if used in another cryptographic primitive that does provide non-repudiation, $H(D)$ provides the same non-repudation as $D$ itself.

Notes:

This is key in digital signatures. However, it's important to realize that if $D$ is kept secret, $H(D)$ is basically meaningless.

Content-Derived Indexing

Hash functions can be used to generate deterministic

and unique lookup keys for databases.

Notes:

Given some fixed property, like an ID and other metadata the user knows beforehand, they can always find the database entry with all of the content they are looking for.

Data Integrity Checks

Members of a peer-to-peer network may host and share

file chunks rather than large files.

In Bittorrent, each file chunk is hash identified so peers can

request and verify the chunk is a member of the larger,

content addressed file.

Notes:

The hash of the large file can also serve as a signal to the protocol that transmission is complete.

Account Abstractions

Public keys can be used to authorize actions by signing of instructions.

The properties of hash functions allow other kinds of representations.

Public Key Representation

Because hashes serve as unique representations of other data,

that other data could include public keys.

A system can map a plurality of key sizes to a fixed length

(e.g. for use as a database key).

For example, the ECDSA public key is 33 bytes:

Public key (hex):

0x02d82cdc83a966aaabf660c4496021918466e61455d2bc403c34bde8148b227d7a

Hash of pub key:

0x8fea32b38ed87b4739378aa48f73ea5d0333b973ee72c5cb7578b143f82cf7e9

^^

Commitment Schemes

It is often useful to commit to some information

without storing or revealing it:

- A prediction market would want to reveal predictions only after the confirming/refuting event occurred.

- Users of a system may want to discuss proposals without storing the proposal on the system.

However, participants should not be able to modify their predictions or proposals.

Commit-Reveal

- Share a hash of data as a commitment ($c$)

- Reveal the data itself ($d$)

It is normal to add some randomness to the message

to expand the input set size:

$$ hash(message + randomness) => commitment $$

Commitment: 0x97c9b8d5019e51b227b7a13cd2c753cae2df9d3b435e4122787aff968e666b0b

Reveal

Message with some added randomness:

"I predict Boris Johnson will resign on 7 July 2022. facc8d3303c61ec1808f00ba612c680f"

Data Identifiers

Sometimes people want to store information in one place and reference it in another. For reference, they need some "fingerprint" or digest.

As an example, they may vote on executing some privileged instructions within the system.

The hash of the information can succinctly represent the information and commit its creator to not altering it.

Data Structures (in Brief)

This is the focus of a later lesson.

Notes: For now, just a brief introduction.

Pointer-Based Linked Lists

Pointer-based linked lists are a foundation of programming.

But pointers are independent from the data they reference,

so the data can be modified while maintaining the list.

That is, pointer-based linked lists are not tamper evident.

Hash-Based Linked Lists

Hash-based lists make the reference related to the data they are referencing.

The properties of hash functions make them a good choice for this application.

Any change at any point in the list would create downstream changes to all hashes.

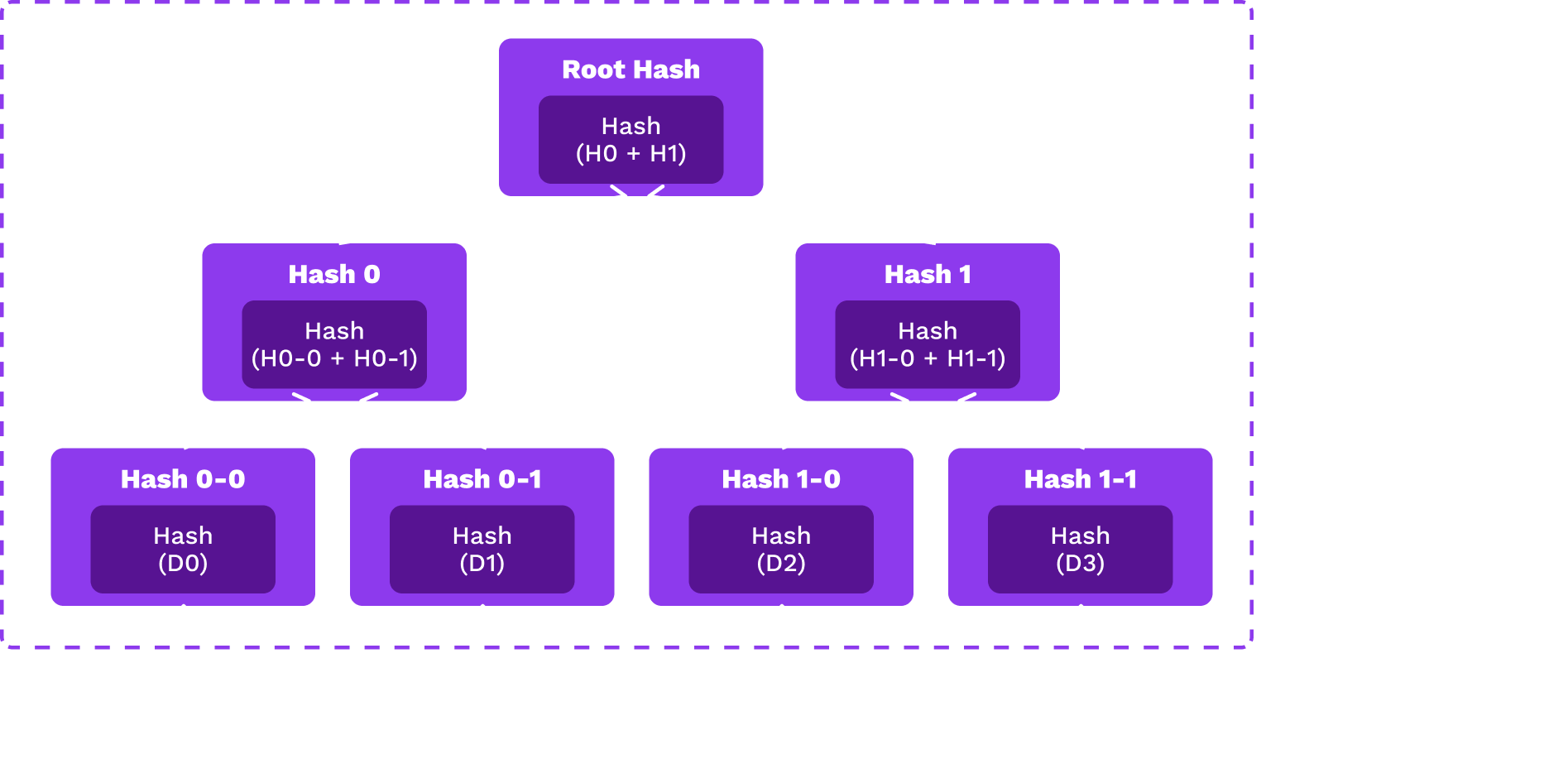

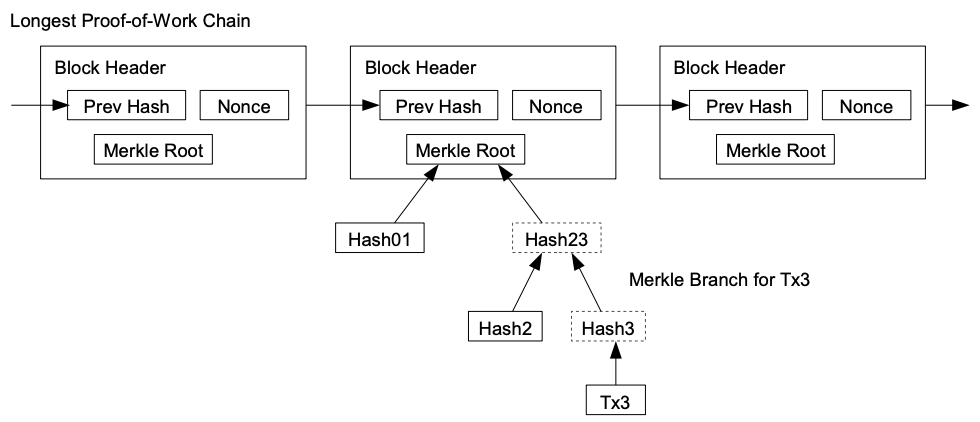

Merkle Trees

Notes:

Each leaf is the hash of some data object and each node is the hash of its children.

Proofs

Merkle trees allow many proofs relevant to the rest of this course,

e.g. that some data object is a member of the tree

without passing the entire tree.

More info in the next lesson.

Questions

Hash Examples in Substrate

Sr25519 Signatures

Sr25519 hashes the message as part of its signing process.

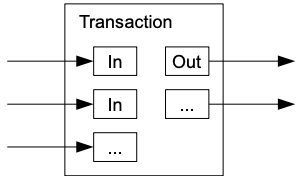

Transactions

In transactions in Substrate, key holders sign a

hash of the instructions when the instructions

are longer than 256 bytes.

Database Keys

TwoX64 is safe to use when users (read: attackers)

cannot control the input, e.g. when a

database key is a system-assigned index.

Blake2 should be used for everything else.

Again, there is a whole lesson on hash-based data structures.

Other Uses of Hashes in Substrate

Hashes are also used for:

- Generating multisig accounts

- Generating system-controlled accounts

- Generating proxy-controlled accounts

- Representing proposals

- Representing claims (e.g. the asset trap)

Activity: Crack the Many Time Pad

Instructors: there is a private guide associated with this activity to assist with hints and further details, be sure to review this before starting.

Introduction

The symmetric one-time pad is known to be secure when the key is only used once. In practice key distribution is not always practical, and users sometimes make the critical mistake of reusing a pre-shared key.

In this activity, you will experience first hand why reusing the key is detrimental to security.

The Challenge

The following several ciphertexts were intercepted on a peer-to-peer communication channel:

- Messages definitively originate in the USA, destined for the UK.

- Each line contains one hex encoded message, in it's entirety.

- We believe all messages were encrypted with the same key.

Your task is to use cryptanalysis to recover the plaintexts of all messages, as well as the encryption key used for them.

160111433b00035f536110435a380402561240555c526e1c0e431300091e4f04451d1d490d1c49010d000a0a4510111100000d434202081f0755034f13031600030d0204040e

050602061d07035f4e3553501400004c1e4f1f01451359540c5804110c1c47560a1415491b06454f0e45040816431b144f0f4900450d1501094c1b16550f0b4e151e03031b450b4e020c1a124f020a0a4d09071f16003a0e5011114501494e16551049021011114c291236520108541801174b03411e1d124554284e141a0a1804045241190d543c00075453020a044e134f540a174f1d080444084e01491a090b0a1b4103570740

000000000000001a49320017071704185941034504524b1b1d40500a0352441f021b0708034e4d0008451c40450101064f071d1000100201015003061b0b444c00020b1a16470a4e051a4e114f1f410e08040554154f064f410c1c00180c0010000b0f5216060605165515520e09560e00064514411304094c1d0c411507001a1b45064f570b11480d001d4c134f060047541b185c

0b07540c1d0d0b4800354f501d131309594150010011481a1b5f11090c0845124516121d0e0c411c030c45150a16541c0a0b0d43540c411b0956124f0609075513051816590026004c061c014502410d024506150545541c450110521a111758001d0607450d11091d00121d4f0541190b45491e02171a0d49020a534f

031a5410000a075f5438001210110a011c5350080a0048540e431445081d521345111c041f0245174a0006040002001b01094914490f0d53014e570214021d00160d151c57420a0d03040b4550020e1e1f001d071a56110359420041000c0b06000507164506151f104514521b02000b0145411e05521c1852100a52411a0054180a1e49140c54071d5511560201491b0944111a011b14090c0e41

0b4916060808001a542e0002101309050345500b00050d04005e030c071b4c1f111b161a4f01500a08490b0b451604520d0b1d1445060f531c48124f1305014c051f4c001100262d38490f0b4450061800004e001b451b1d594e45411d014e004801491b0b0602050d41041e0a4d53000d0c411c41111c184e130a0015014f03000c1148571d1c011c55034f12030d4e0b45150c5c

011b0d131b060d4f5233451e161b001f59411c090a0548104f431f0b48115505111d17000e02000a1e430d0d0b04115e4f190017480c14074855040a071f4448001a050110001b014c1a07024e5014094d0a1c541052110e54074541100601014e101a5c

0c06004316061b48002a4509065e45221654501c0a075f540c42190b165c

00000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000000

Instructions

-

Team up with 2-4 students to complete this activity.

-

Briefly inspect the ciphertext to see if you can identify patterns that may hint some things about their origin.

-

Research based on what we know bout the messages to find clues to help come up with a theory and game plan to complete your task.

-

Write a program in Rust that finds the key to generate the plaintext from the provided cipher texts.

The general steps are:- Find the length of the longest input cipher text.

- Generate a key of that length.

- Find what the correct key is...

Note that this task is intended to be a bit vague, give it your best effort. We will be sharing hints as time progresses for everyone. Don't hesitate to ask for support if you're feeling stuck, or just ask your peers!

Finished?

Once complete, let a faculty know!

One last ciphertext using the same key that should prove tricky:

1f3cb1f3e01f3fd1f3ea1f3e61f3e01f3e71f3b31f3a91f3c81f3a91f3f91f3fc1f3fb1f3ec1f3e51f3f01f3a91f3f91f3ec1f3ec526e1b014a020411074c17111b1c071c4e4f0146430d0d08131d1d010707040017091648461e1d0618444f074c010e19594f0f1f1a07024e1d041719164e1c1652114f411645541b004e244f080213010c004c3b4c0911040e480e070b00310213101c4d0d4e00360b4f151a005253184913040e115454084f010f114554111d1a550f0d520401461f3e01f3e71f3e81f3e71f3ea1f3e01f3e81f3e51f3a91f3e01f3e71f3fa1f3fd1f3e01f3fd1f3fc1f3fd1f3e01f3e61f3e71f3a7

Notice a pattern? why might that be... 🤔

If you want more to do, find ways to improve your solution, perhaps:

- Create a tool that automates the cipher key generation.

- Add a way to generate new cipher texts.

- Create your own cipher texts using other cipher methods.

- Provide a new set of ciphertexts that were intentionally constructed not to use the most common English words.

Citation

This activity is cribbed from Dan Boneh's Coursera Cryptography I course.

Encryption

How to use the slides - Full screen (new tab)

Encryption

Goals for this lesson

- Learn about the differences between symmetric and asymmetric encryption.

Symmetric Cryptography

Symmetric encryption assumes all parties begin with some shared secret information, a potentially very difficult requirement.

The shared secret can then be used to protect further communications from others who do not know this secret.

In essence, it gives a way of extending a shared secret over time.

Symmetric Encryption

Examples: ChaCha20, Twofish, Serpent, Blowfish, XOR, DES, AES

Symmetric Encryption API

Symmetric encryption libraries should generally all expose some basic functions:

fn generate_key(r) -> k;

Generate ak(secret key) from some inputr.fn encrypt(k, msg) -> ciphertext;

Takeskand a message; returns the ciphertext.fn decrypt(k, ciphertext) -> msg;

Takeskand a ciphertext; returns the original message.

It always holds that decrypt(k, encrypt(k, msg)) == msg.

Notes:

The input r is typically a source of randomness, for example the movement pattern of a mouse.

Symmetric Encryption Guarantees

Provides:

- Confidentiality

- Authenticity*

Does not provide:

- Integrity*

- Non-Repudiation

Notes:

- Authenticity: The message could only be sent by someone who knows the shared secret key. In most cases, this is functionally authentication to the receiving party.

- Integrity: There is no proper integrity check, however the changed section of the message will be gibberish if it has been changed. Detection of gibberish could function as a form of integrity-checking.

Non-repudiation for Symmetric Encryption

There is cryptographic proof that the secret was known to the producer of the encrypted message.

However, knowledge of the secret is not restricted to one party: Both (or all) parties in a symmetrically encrypted communication know the secret. Additionally, in order to prove this to anyone, they must also gain knowledge of the secret.

Notes:

The degree of non-repudiation given by pure symmetric crytography is not very useful.

Symmetric Encryption

Example: XOR Cipher

The encryption and decryption functions are identical: applying a bitwise XOR operation with a key.

Plain: 1010 -->Cipher: 0110

Key: 1100 | 1100

---- | ----

0110--^ 1010

Notes:

A plaintext can be converted to ciphertext, and vice versa, by applying a bitwise XOR operation with a key known to both parties.

Symmetric Encryption

⚠ Warning ⚠

We typically expect symmetric encryption to preserve little about the original plaintext. We caution however that constructing these protocols remains delicate, even given secure primitives, with two classical examples being unsalted passwords and the ECB penguin.

ECB penguin

Original image

Encrypted image

(by blocks)

Encrypted image

(all at once)

Notes:

The ECB penguin shows what can go wrong when you encrypt a small piece of data, and do this many times with the same key, instead of encrypting data all at once.

Image sources: https://github.com/robertdavidgraham/ecb-penguin/blob/master/Tux.png and https://github.com/robertdavidgraham/ecb-penguin/blob/master/Tux.ecb.png and https://upload.wikimedia.org/wikipedia/commons/5/58/Tux_secure.png

Asymmetric Encryption

- Assumes the sender does not know the recipient's secret "key" 🎉😎

- Sender only knows a special identifier of this secret

- Messages encrypted with the special identifier can only be decrypted with knowledge of the secret.

- Knowledge of this identifier does not imply knowledge of the secret, and thus cannot be used to decrypt messages encrypted with it.

- For this reason, the identifier may be shared publicly and is known as the public key.

Asymmetric Encryption

Why "Asymmetric"?

Using only the public key, information can be transformed ("encrypted") such that only those with knowledge of the secret are able to inverse and regain the original information.

i.e. Public key is used to encrypt but a different, secret, key must be used to decrypt.

Asymmetric Encryption API

Asymmetric encryption libraries should generally all expose some basic functions:

fn generate_key(r) -> sk;

Generate ask(secret key) from some inputr.fn public_key(sk) -> pk;

Generate apk(public key) from the private keysk.fn encrypt(pk, msg) -> ciphertext;

Takes the public key and a message; returns the ciphertext.fn decrypt(sk, ciphertext) -> msg;

For the inputsskand a ciphertext; returns the original message.

It always holds that decrypt(sk, encrypt(public_key(sk), msg)) == msg.

Notes:

The input r is typically a source of randomness, for example the movement pattern of a mouse.

Asymmetric Encryption Guarantees

Provides:

- Confidentiality

Does not provide:

- Integrity*

- Authenticity

- Non-Repudiation

Notes:

- Authenticity: The message could only be sent by someone who knows the shared secret key. In most cases, this is functionally authentication to the receiving party.

- Integrity: There is no proper integrity check, however the changed section of the message will be gibberish if it has been changed. Detection of gibberish could function as a form of integrity-checking.

Diffie-Hellman Key Exchange

Mixing Paint Visualization

Notes:

Mixing paint example. Image Source: https://upload.wikimedia.org/wikipedia/commons/4/46/Diffie-Hellman_Key_Exchange.svg

Authenticated Encryption

Authenticated encryption adds a Message Authentication Code to additionally provide an authenticity and integrity guarantee to encrypted data.

A reader can check the MAC to ensure the message was constructed by someone knowing the secret.

Notes:

Specifically, this authenticity says that anyone who does not know the sender's secret could not construct the message.

Generally, this adds ~16-32 bytes of overhead per encrypted message.

AEAD (Authenticated Encryption Additional Data)

AEAD is authenticated with some extra data which is unencrypted, but does have integrity and authenticity guarantees.

Notes:

Authenticated encryption and AEAD can work with both symmetric and asymmetric cryptography.

AEAD Example

Imagine a table with encrypted medical records stored in a table, where the data is stored using AEAD. What are the advantages of such a scheme?

UserID -> Data (encrypted), UserID (additional data)

Notes: By using this scheme, the data is always associated with the userID. An attacker could not put that entry into another user's entry.

Hybrid Encryption

Hybrid encryption combines the best of all worlds in encryption. Asymmetric encryption establishes a shared secret between the sender and a specific public key, and then uses symmetric encryption to encrypt the actual message. It can also be authenticated.

Notes:

In practice, asymmetric encryption is almost always hybrid encryption.

Cryptographic Properties

| Property | Symmetric | Asymmetric | Authenticated | Hybrid + Authenticated |

|---|---|---|---|---|

| Confidentiality | Yes | Yes | Yes | Yes |

| Authenticity | Yes* | No | Yes* | Yes |

| Integrity | No* | No* | Yes | Yes |

| Non-repudiation | No | No* | No | No* |

Notes:

- Symmetric-Authentication and Authenticated-Authenticity: The message could only be sent by someone who knows the shared secret key. In most cases, this is functionally authentication to the receiving party.

- Symmetric-Integrity and Asymmetric-Integrity: There is no proper integrity check, however the message will be gibberish if it has been changed. Detection of gibberish could function as a form of integrity-checking.

- Non-Repudation: Even though none of these primitives provide non-repudiation on their own, it's very possible to add non-repudation to asymmetric and hybrid schemes via signatures.

- Note that encryption also, most importantly, makes the data available to everyone who should have access.

Questions

Digital Signature Basics

How to use the slides - Full screen (new tab)

Digital Signatures Basics

Signature API

Signature libraries should generally all expose some basic functions:

fn generate_key(r) -> sk;

Generate ask(secret key) from some inputr.fn public_key(sk) -> pk;

Return thepk(public key) from ask.fn sign(sk, msg) -> signature;

Takesskand a message; returns a digital signature.fn verify(pk, msg, signature) -> bool;

For the inputspk, a message, and a signature; returns whether the signature is valid.

Notes:

The input r could be anything, for example the movement pattern of a mouse.

For some cryptographies (ECDSA), the verify might not take in the public key as an input. It takes in the message and signature, and returns the public key if it is valid.

Subkey Demo

Key Generation and Signing

Notes:

See the Jupyter notebook and/or HackMD cheat sheet for this lesson.

- Generate a secret key

- Sign a message

- Verify the signature

- Attempt to alter the message

Hash Functions

There are two lessons dedicated to hash functions.

But they are used as part of all signing processes.

For now, we only concern ourselves with using Blake2.

Hashed Messages

As mentioned in the introduction,

it's often more practical to sign the hash of a message.

Therefore, the sign/verify API may be used like:

fn sign(sk, H(msg)) -> signature;fn verify(pk, H(msg), signature) -> bool;

Where H is a hash function (for our purposes, Blake2).

This means the verifier will need to run the correct hash function on the message.

Cryptographic Guarantees

Signatures provide many useful properties:

- Confidentiality: Weak, the same as a hash

- Authenticity: Yes

- Integrity: Yes

- Non-repudiation: Yes

Notes:

If a hash is signed, you can prove a signature is valid without telling anyone the actual message that was signed, just the hash.

Signing Payloads

Signing payloads are an important part of system design.

Users should have credible expectations about how their messages are used.

For example, when a user authorizes a transfer,

they almost always mean just one time.

Notes:

There need to be explicit rules about how a message is interpreted. If the same signature can be used in multiple contexts, there is the possibility that it will be maliciously resubmitted.

In an application, this typically looks like namespacing in the signature payload.

Signing and Verifying

Notes:

Note that signing and encryption are not inverses.

Replay Attacks

Replay attacks occur when someone intercepts and resends a valid message.

The receiver will carry out the instructions since the message contains a valid signature.

- Since we assume that channels are insecure, all messages should be considered intercepted.

- The "receiver", for blockchain purposes, is actually an automated system.

Notes:

Lack of context is the problem. Solve by embedding the context and intent _within the message being signed. Tell the story of Ethereum Classic replays.

Replay Attack Prevention

Signing payloads should be designed so that they can

only be used one time and in one context.

Examples:

- Monotonically increasing account nonces

- Timestamps (or previous blocks)

- Context identifiers like genesis hash and spec versions

Signature Schemes

ECDSA

- Uses Secp256k1 elliptic curve.

- ECDSA (used initially in Bitcoin/Ethereum) was developed to work around the patent on Schnorr signatures.

- ECDSA complicates more advanced cryptographic techniques, like threshold signatures.

- Nondeterministic

Ed25519

- Schnorr signature designed to reduce mistakes in implementation and usage in classical applications, like TLS certificates.

- Signing is 20-30x faster than ECDSA signatures.

- Deterministic

Sr25519

Sr25519 addresses several small risk factors that emerged

from Ed25519 usage by blockchains.

Use in Substrate

- Sr25519 is the default key type in most Substrate-based applications.

- Its public key is 32 bytes and generally used to identify key holders (likewise for ed25519).

- Secp256k1 public keys are 33 bytes, so their hash is used to represent their holders.

Questions

Advanced Digital Signatures

How to use the slides - Full screen (new tab)

Advanced Digital Signatures

Certificates

A certificate is essentially a witness statement concerning one or more public keys. It is a common usage of digital signatures, but it is not a cryptographic primitive!

Notes:

A certificate is one issuing key signing a message containing another certified key, which attests to some properties or relationship about the certified key.

We must already trust the issuing key to give this attestation any significance, traditionally provided under "Certificate Authority" or "Web of Trust" schemes.

Certificates

A certification system specified conventions on who is allowed to issue certificates, the rules over their issuance (e.g. time limits and revocation) as well as their format and semantics.

For example, the certificate transparency protocol for TLS certificates helps protect against compromised Certificate Authorities.

Notes:

Certificate transparency: explanation and dashboard

- Maybe mention PGP web-of-trust style schemes

Certificates in Web3

We are building systems that don't have a "Certificate Authority".

But we can still use certificates in some niche instances.

Notes:

Potential example to give verbally:

- Session keys are a set of keys that generally run in online infrastructure. An account, whose keys are protected, can sign a transaction to certify all the keys in the set.

- Session keys are used to sign operational messages, but also in challenge-response type games to prove availability by signing a message.

Multi-Signatures

We often want signatures that must be signed

by multiple parties to become valid.

- Require some threshold of members to

agree to a message - Protect against key loss

Types of Multi-Signature

- Verifier enforced

- Cryptographic threshold

- Cryptographic non-threshold

(a.k.a. signature aggregation)

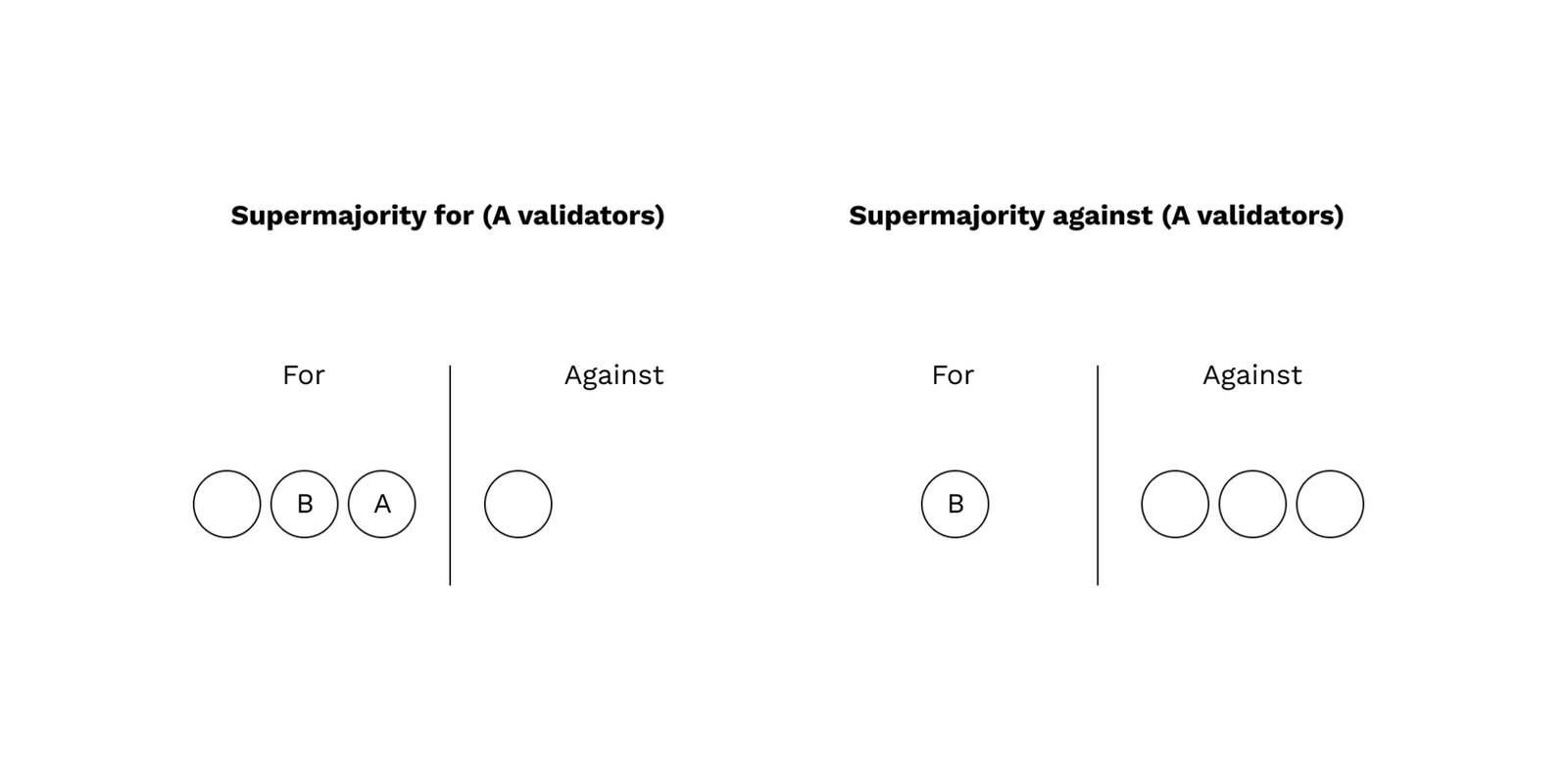

Verifier Enforced Multiple Signatures

We assume that there is some verifier, who can check that some threshold of individual keys have provided valid signatures.

This could be a trusted company or third party. For our purposes, it's a blockchain.

Verifier Enforced Multiple Signatures

Multiple signatures enforced by a verifier generally provide a good user experience, as no interaction is required from the participants.

Notes:

This good experience comes at the cost of using state and more user interactions with the system, but is generally low.

Even in a web3 system, the verifier can be distinct from the blockchain. 5 people can entrust a verifier with the identity of "all 5 signed this" associated to a verifier-owned private key.

Cryptographic Multi-Sigs

We want a succinct way to demonstrate that everyone from some set of parties have signed a message. This is achieved purely on the signer side (without support from the verifier).

Example: "The five key holders have signed this message."

Key Generation for Multi-Sigs

In regular multi-signatures,

signatures from individual public keys are aggregated.

Each participant can choose their own key to use for the multi-signature.

Notes:

In some cases, a security requirement of these systems is that every participant demonstrates ownership of the public key submitted for the multi-signature, otherwise security can be compromised.

Cryptographic Threshold Multi-Sigs

This makes more compact signatures compatible with legacy systems. Unlike a regular multi-sig, the public key is associated with a threshold number of signing parties, so not all parties are needed to take part in the signing process to create a valid signature.

This requires MPC protocols and may need multiple rounds of interaction to generate the final signature. They may be vulnerable to DoS from a malfunctioning (or malicious) key-holder.

Example: "5 of 7 key holders have signed this message."

Notes:

These require multi-party computation (MPC) protocols, which add some complexity for the signing users.

Key Generation - Threshold

Threshold multi-signature schemes require that all signers run a distributed key generation (DKG) protocol that constructs key shares.

The secret encodes the threshold behavior, and signing demands some threshold of signature fragments.

This DKG protocol breaks other useful things, like hard key derivation.

Schnorr Multi-Sigs

Schnorr signatures are primarily used for threshold multi-sig.

- Fit legacy systems nicely, and can reduce fees on blockchains.

- Reduce verifier costs in bandwidth & CPU time, so great for certificates.

- Could support soft key derivations.

Schnorr Multi-Sigs

However, automation becomes tricky.

We need agreement upon the final signer list and two random nonce contributions from each prospective signer, before constructing the signature fragments.

BLS Signatures

BLS signatures are especially useful for aggregated (non-threshold) multi-signatures (but can be used for threshold as well).

Signatures can be aggregated without advance agreement upon the signer list, which simplifies automation and makes them useful in consensus.

Verifying individual signatures is slow, but verifying aggregated ones is relatively fast.

(Coming to Substrate soonish.)

BLS Signatures

Allows multiple signatures generated under multiple public keys for multiple messages to be aggregated into a single signature.

- Uses heavier pairing friendly elliptic curves than ECDSA/Schnorr.

- Very popular for consensus.

BLS Signatures

However...

- DKGs remain tricky (for threshold).

- Soft key derivations are typically insecure for BLS.

- Verifiers are hundreds of times slower than Schnorr, due to using pairings, for a single signature.

- But for hundreds or thousands of signatures on the same message, aggregated signature verification can be much faster than Schnorr.

Schnorr and BLS Summary

Schnorr & BLS multi-signatures avoid complicating verifier logic,

but introduce user experience costs such as:

- DKG protocols

- Reduced key derivation ability

- Verification speed

Ring Signatures

- Ring signatures prove the signer lies within some "anonymity set" of signing keys, but hide which key actually signed.

- Ring signatures come in many sizes, with many ways of presenting their anonymity sets.

- Anonymous blockchain transactions typically employ ring signatures (Monero, ZCash).

Notes:

- ZCash uses a ring signature based upon Groth16 zkSNARKs which makes the entire chain history be the anonymity set.

- Monero uses ring signatures with smaller signer sets.

- Ring signatures trade some non-repudation for privacy.

Questions

Hash Based Data Structures

How to use the slides - Full screen (new tab)

Hash Based Data Structures

Comparison to

Pointer Based Data Structures

- A hash references the content of some data;

- A pointer tells you where to find it;

- We can not have cycles of hashes.

Hash Chains

A hash chain is a linked list using hashes to connect nodes.

Notes:

Each block has the hash of the previous one.

Merkle Trees

A binary Merkle tree is a binary tree using hashes to connect nodes.

Notes:

Ralph Merkle is a Berkeley alum!

Proofs

- The root or head hash is a commitment to the entire data structure.

- Generate a proof by expanding some but not all hashes.

Crucial for the trustless nature of decentralised cryptographic data systems!

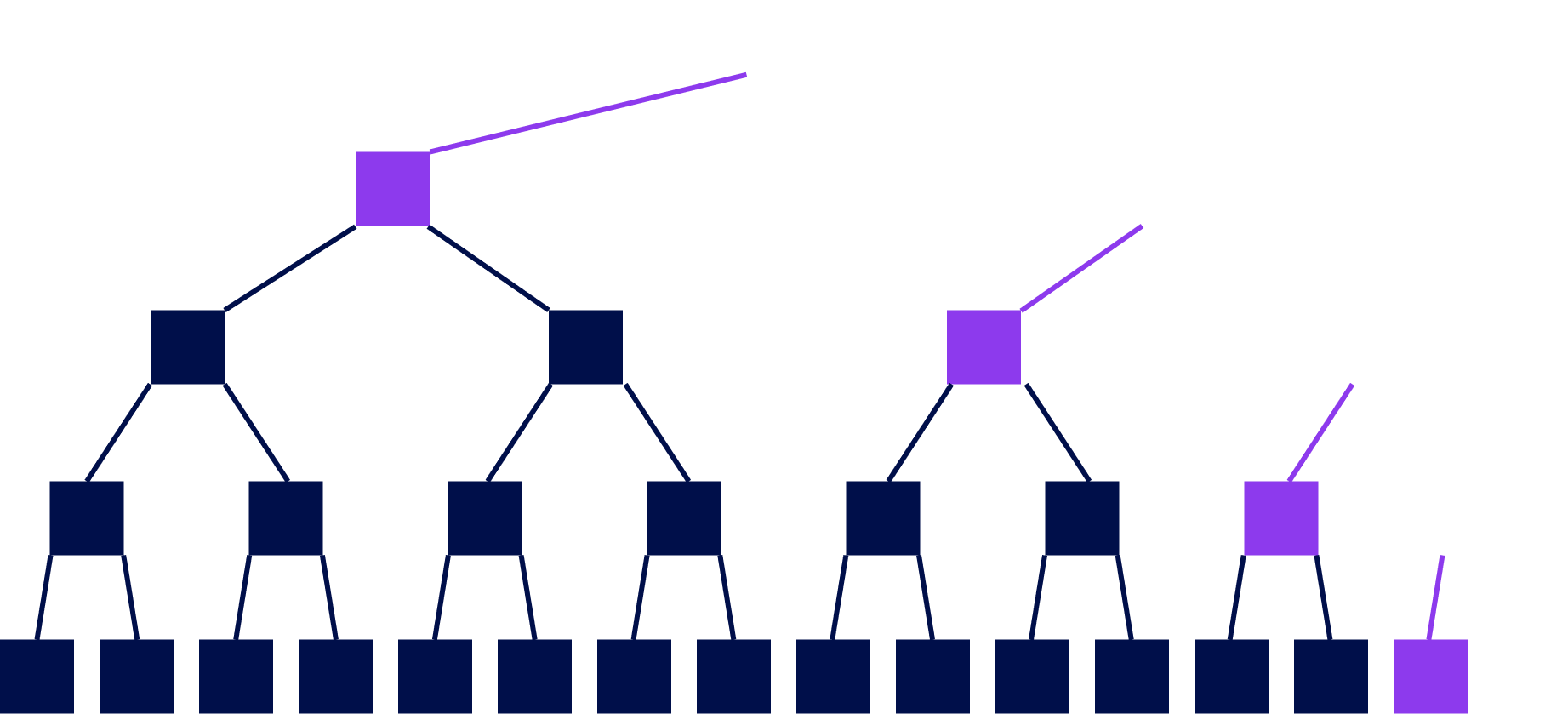

Proofs: Merkle Copaths

Notes:

Given the children of a node, we can compute a node Given the purple nodes and the white leaf, we can compute the white nodes bottom to top. If we compute the correct root, this proves that the leaf was in the tree

Security

Collision resistance: we reasonably assume only one preimage for each hash,

therefore making the data structure's linkage persistent and enduring (until the cryptography becomes compromised 😥).

Notes:

Explain what could happen when this fails.

Proof Sizes

Proof of a leaf has size $O(\log n)$

and so do proofs of updates of a leaf

Key-Value Databases and Tries

Key-value database

The data structure stores a map key -> value.

We should be able to:

put(key, value)get(key)delete(key)

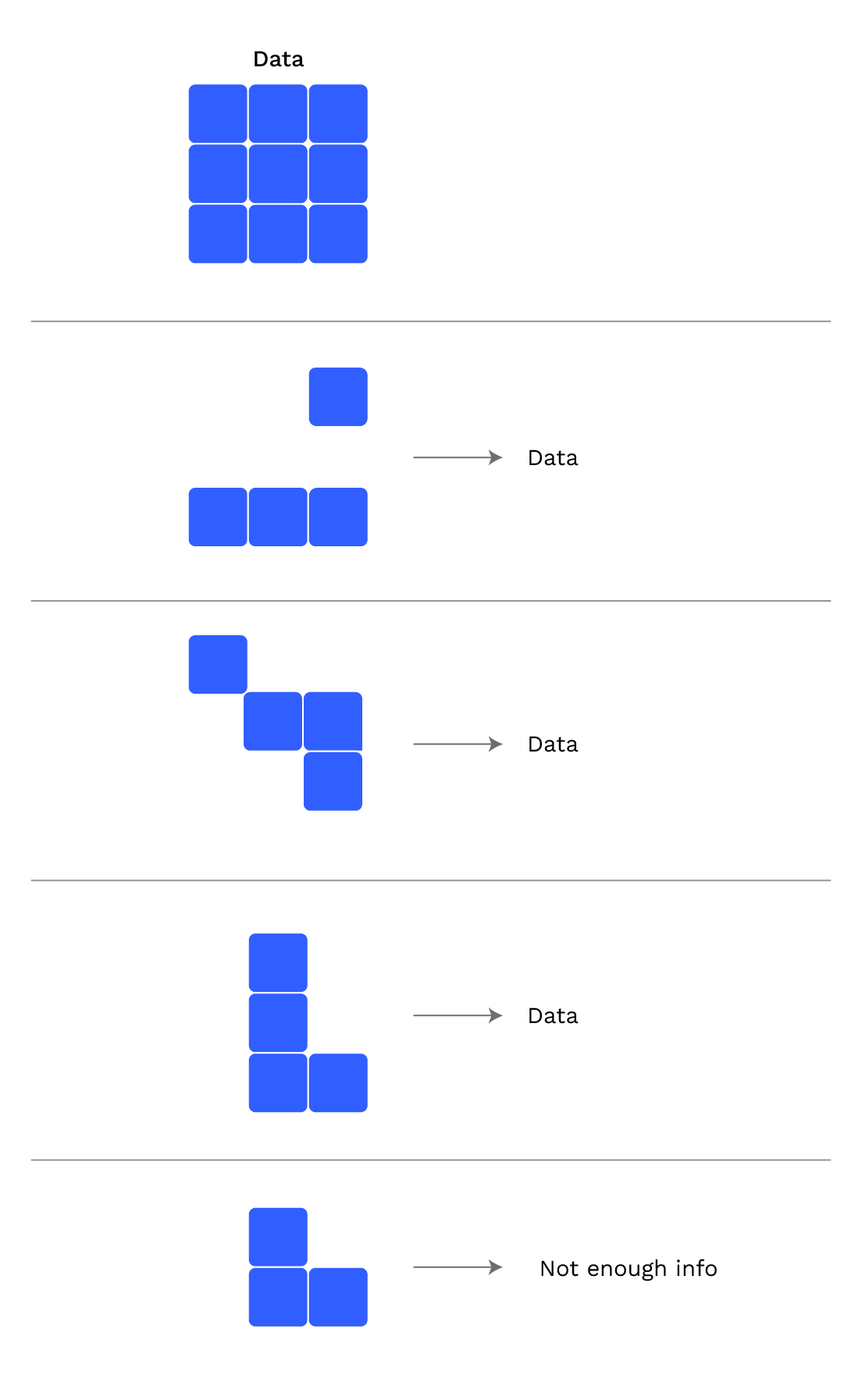

Provability in key-value databases

We should also be able to perform the following operations for a provable key-value database:

- For any key, if

<key,value>is in the database, we can prove it. - If no value is associated to a key, we need to be able to prove that as well.

Types of Data Structures

- Trees are rooted, directed acyclic graphs where each child has only one parent.

- Merkle Trees are trees which use hashes as links.

- Tries are a particular class of trees where:

- Given a particular piece of data, it will always be on a particular path.

- Radix Tries are a particular class of a trie where:

- The location of a value is determined the path constructed one digit at a time.

- Patricia Tries are radix tries which are optimized to ensure lonely node-paths are consolidated into a single node.

Notes:

Just a selection we'll cover in this course.

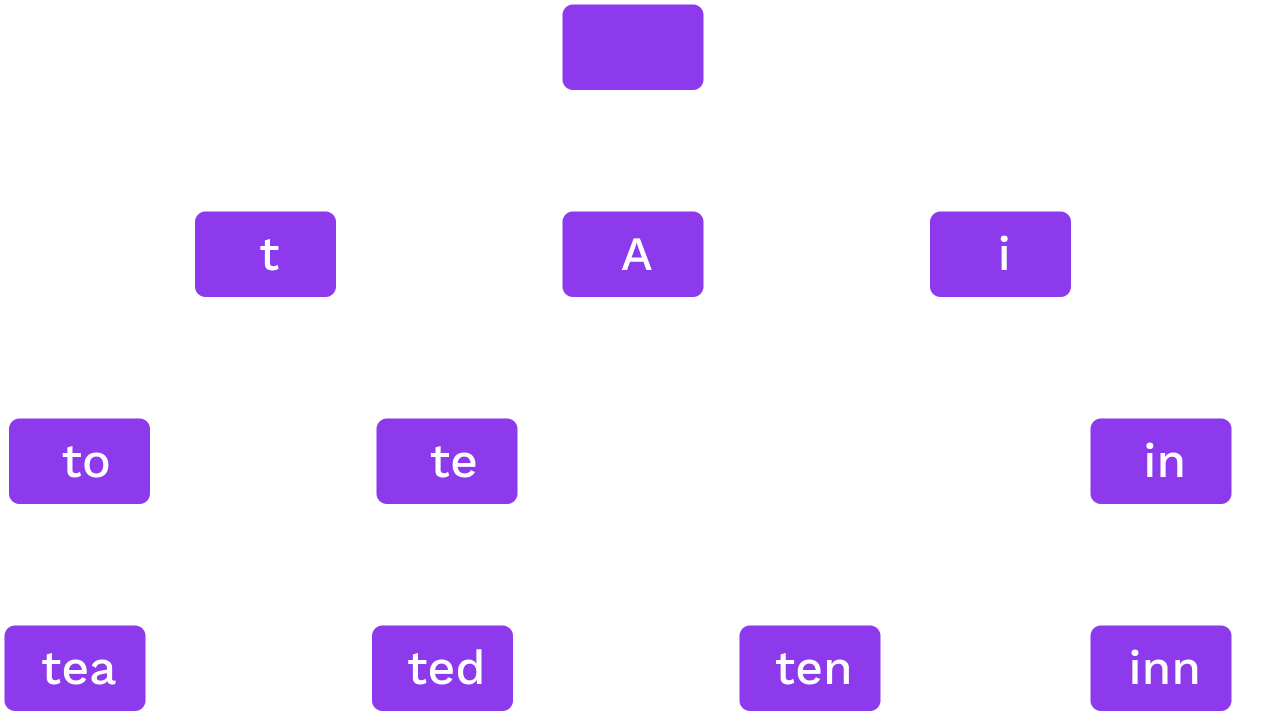

Radix Trie

Words: to, tea, ted, ten, inn, A.

Each node splits on the next digit in base $r$

Notes:

In this image, $r$ is 52 (26 lowercase + 26 uppercase).

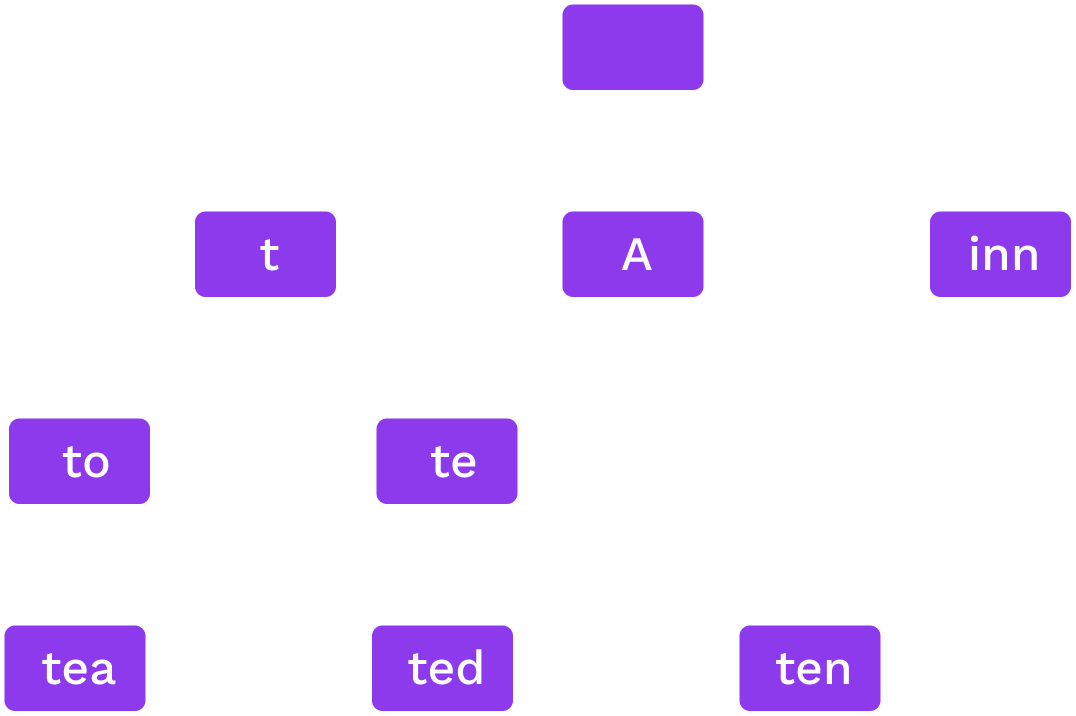

Patricia Trie

Words: to, tea, ted, ten, inn, A.

If only one option for a sequence we merge them.

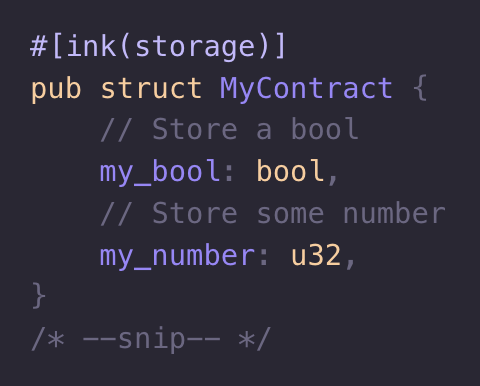

Patricia Trie Structures

#![allow(unused)] fn main() { pub enum Node { Leaf { partial_path: Slice<RADIX>, value: Value }, Branch { partial_path: Slice<RADIX>, children: [Option<Hash>; RADIX], value: Option<Value>, }, } }

Notes:

The current implementation actually makes use of dedicated "extension" nodes instead of branch nodes that hold a partial path. There's a good explanation of them here.

Additionally, if the size of a value is particularly large, it is replaced with the hash of its value.

Hash Trie

- Inserting arbitrary (or worse, user-determined) keys into the Patricia tree can lead to highly unbalanced branches, enlarging proof-sizes and lookup times.

- Solution: pre-hash the data before inserting it to make keys random.

- Resistance against partial collision is important.

- Could be a Merkle trie or regular.

Computational and Storage

Trade-offs

What radix $r$ is best?

- Proof size of a leaf is $r \log_r n$

- $r=2$ gives the smallest proof for one leaf

...but:

- Higher branching at high levels of the tree can give smaller batch proofs.

- For storage, it is best to read consecutive data so high $r$ is better.

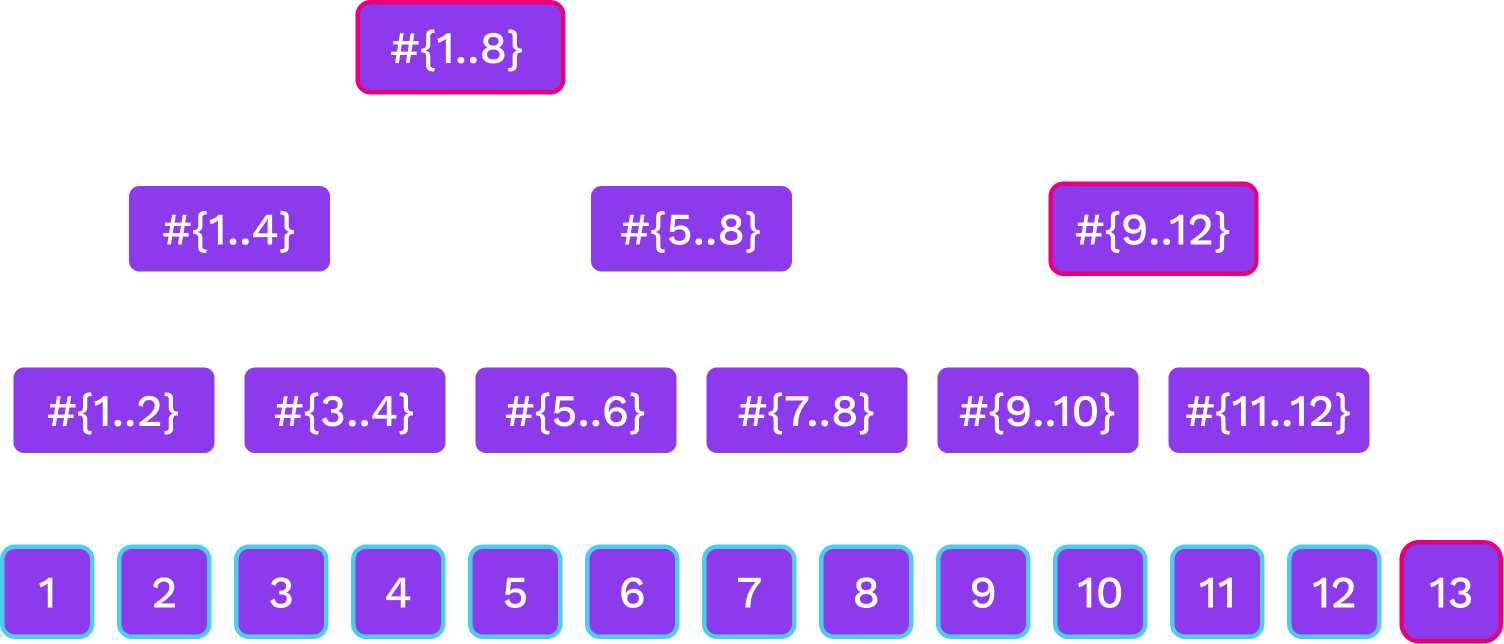

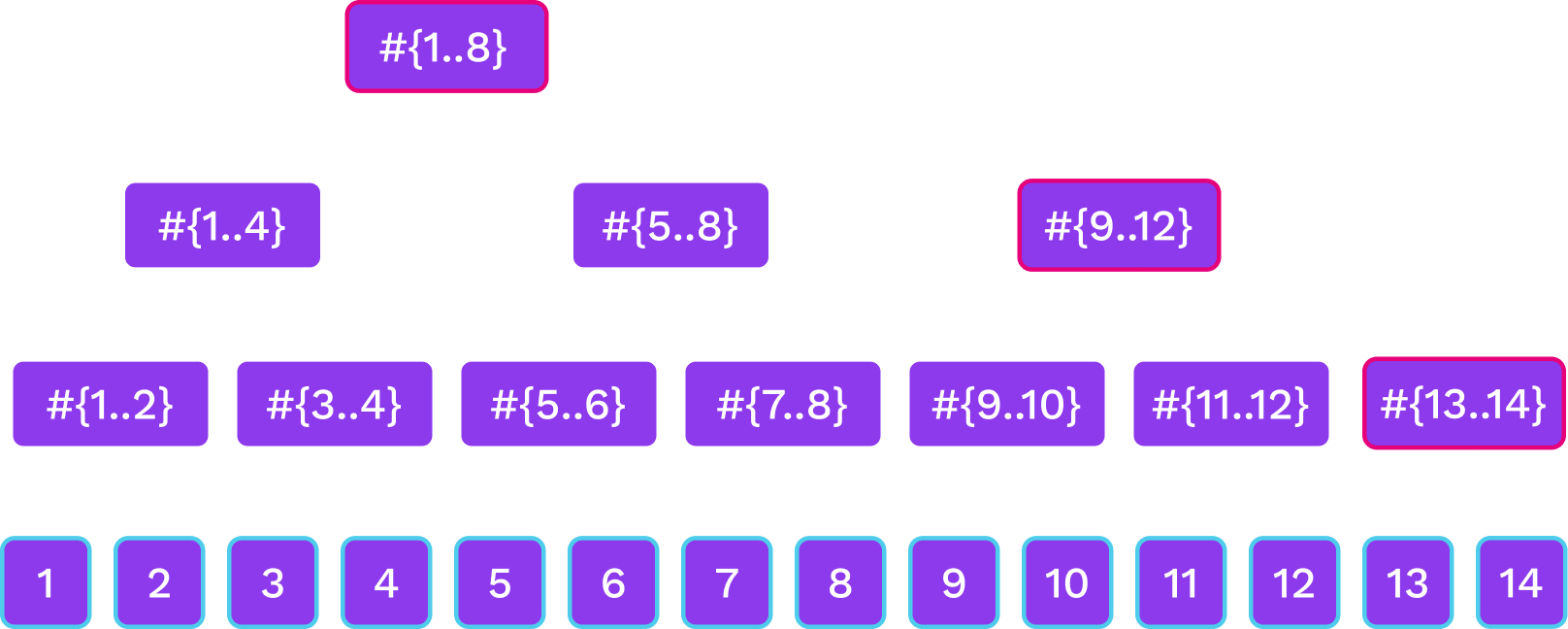

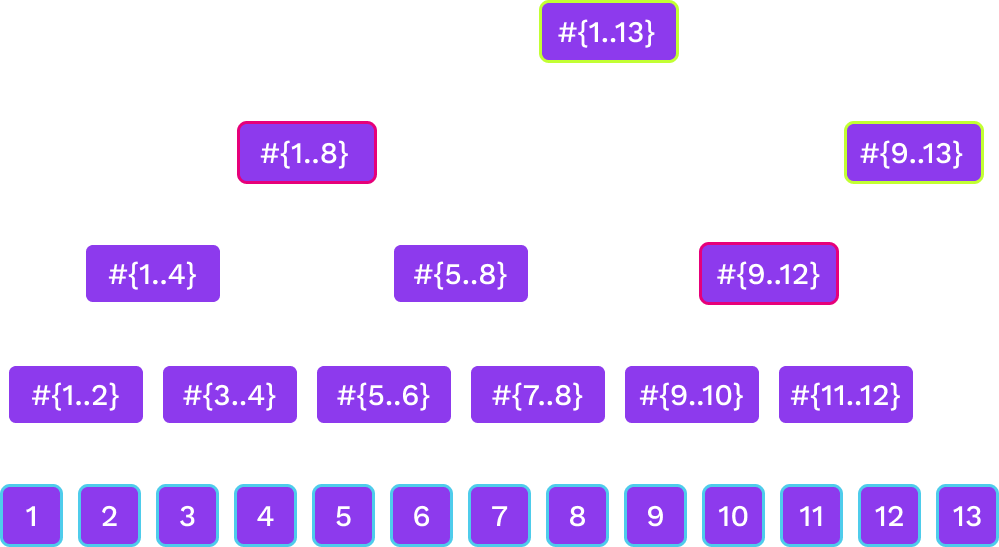

Merkle Mountain Ranges

- Efficient proofs and updates for a hash chain

- Append only data structure

- Lookup elements by number

Merkle Mountain Ranges

Notes:

we have several Merkle trees of sizes that are powers of two. The trees that are here correspond to the binary digits of 13 that are 1.

Merkle Mountain Ranges

Merkle Mountain Ranges

Notes:

- Not as balanced as a binary tree but close

- Can update the peak nodes alone on-chain

Questions

Exotic Primitives

How to use the slides - Full screen (new tab)

Exotic Primitives

Outline

Verifiable Random Functions

(VRFs)

-

Used to obtain private randomness, that is publicly verifiable

-

A variation on a signature scheme:

- still have private/public key pairs, input as message

- in addition to signature, we get an output

VRF Interface

-

sign(sk, input) -> signature -

verify(pk, signature) -> option output -

eval(sk,input) -> output

Notes:

The output of verification being an option represents the possibility of an invalid signature

VRF Output properties

- Output is a deterministic function of key and input

- i.e. eval should be deterministic

- It should be pseudo-random

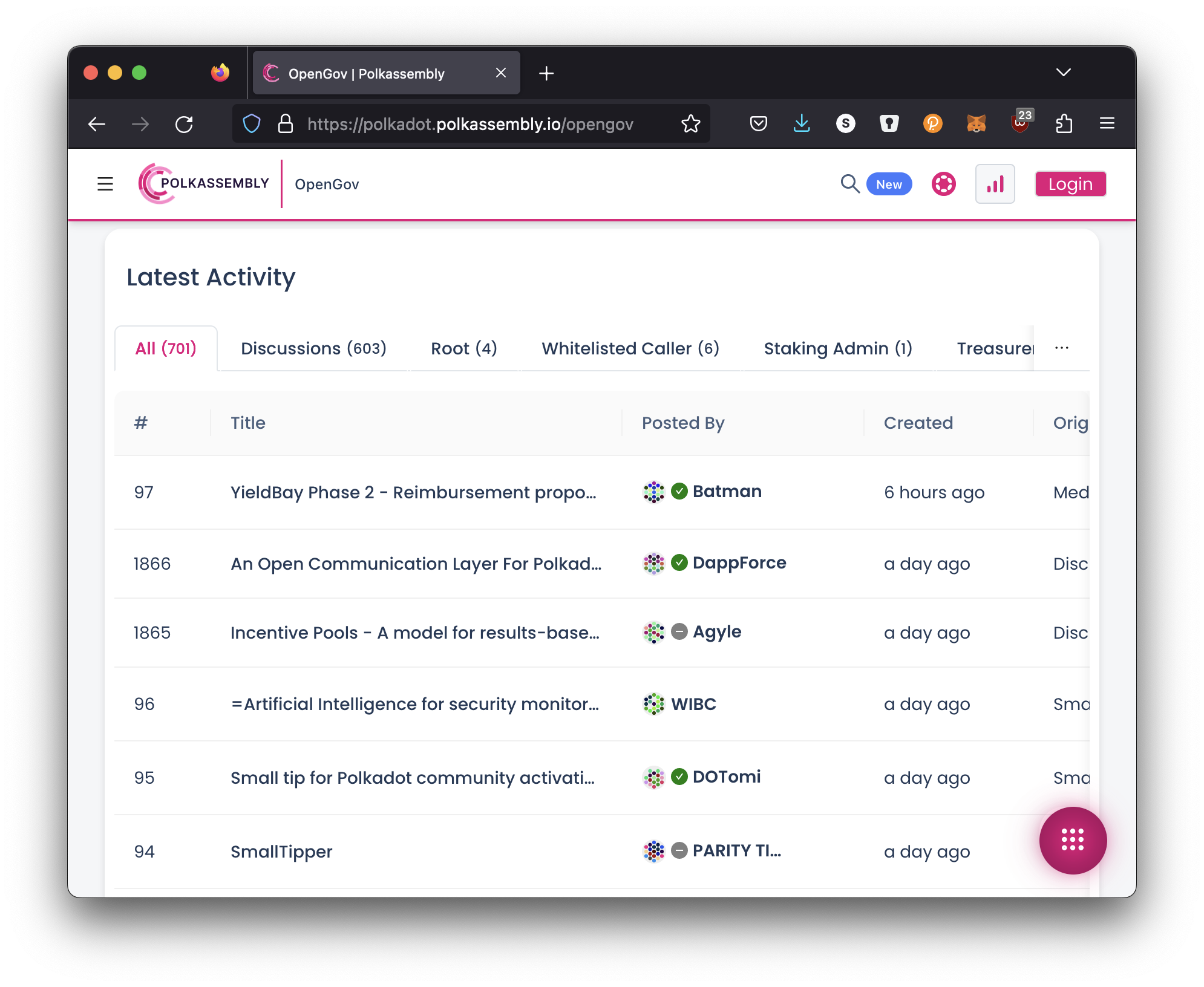

- But until the VRF is revealed, only the holder